8

Matthew Knachel

Inductive Logic II: Probability and Statistics

I. The Probability Calculus

Inductive arguments, recall, are arguments whose premises support their conclusions insofar as they make them more probable. The more probable the conclusion in light of the premises, the stronger the argument; the less probable, the weaker. As we saw in the last chapter, it is often impossible to say with any precision exactly how probable the conclusion of a given inductive argument is in light of its premises; often, we can only make relative judgments, noting that one argument is stronger than another, because the conclusion is more probable, without being able to specify just how much more probable it is.

Sometimes, however, it is possible to specify precisely how probable the conclusion of an inductive argument is in light of its premises. To do that, we must learn something about how to calculate probabilities; we must learn the basics of the probability calculus. This is the branch of mathematics dealing with probability computations.[1] We will cover its most fundamental rules and learn to perform simple calculations. After that preliminary work, we use the tools provided by the probability calculus to think about how to make decisions in the face of uncertainty, and how to adjust our beliefs in the light of evidence. We will consider the question of what it means to be rational when engaging in these kinds of reasoning activities.

Finally, we will turn to an examination of inductive arguments involving statistics. Such arguments are of course pervasive in public discourse. Building on what we learned about probabilities, we will cover some of the most fundamental statistical concepts. This will allow us to understand various forms of statistical reasoning—from different methods of hypothesis testing to sampling techniques. In addition, even a rudimentary understanding of basic statistical concepts and reasoning methods will put us in a good position to recognize the myriad ways in which statistics are misunderstood, misused, and deployed with the intent to manipulate and deceive. As Mark Twain said, “There are three kinds of lies: lies, damned lies, and statistics.”[2] Advertisers, politicians, pundits—everybody in the persuasion business—trot out statistical claims to bolster their arguments, and more often than not they are either deliberately or mistakenly committing some sort of fallacy. We will end with a survey of these sorts of errors.

But first, we examine the probability calculus. Our study of how to compute probabilities will divide neatly into two sections, corresponding to the two basic types of probability calculations one can make. There are, on the one hand, probabilities of multiple events all occurring—or, equivalently, multiple propositions all being true; call these conjunctive occurrences. We will first learn how to calculate the probabilities of conjunctive occurrences—that this event and this other event and some other event and so on will occur. On the other hand, there are probabilities that at least one of a set of alternative events will occur—or, equivalently, that at least one of a set of propositions will be true; call these disjunctive occurrences. In the second half of our examination of the probability calculus we will learn how to calculate the probabilities of disjoint occurrences— that this event or this other event or some other event or… will occur.

Conjunctive Occurrences

Recall from our study of sentential logic that conjunctions are, roughly, ‘and’-sentences. We can think of calculating the probability of conjunctive occurrences as calculating the probability that a particular conjunction is true. If you roll two dice and want to know your chances of getting “snake eyes” (a pair of ones), you’re looking for the probability that you’ll get a one on the first die and a one on the second.

Such calculations can be simple or slightly more complex. What distinguishes the two cases is whether or not the events involved are independent. Events are independent when the occurrence of one has no effect on the probability that any of the others will occur. Consider the dice mentioned above. We considered two events: one on die #1, and one on die #2. Those events are independent. If I get a one on die #1, that doesn’t affect my chances of getting a one on the second die; there’s no mysterious interaction between the two dice, such that what happens with one can affect what happens with the other. They’re independent.[3] On the other hand, consider picking two cards from a standard deck (and keeping them after they’re drawn).[4] Here are two events: the first card is a heart, the second card is a heart. Those events are not independent. Getting a heart on the first draw affects your chances of getting a second heart (it makes the second heart less likely).

When events are independent, things are simple. We calculate the probability of their conjunctive occurrence by multiplying the probabilities of their individual occurrences. This is the Simple Product Rule:

P(a • b • c • …) = P(a) x P(b) x P(c) x …

This rule is abstract; it covers all cases of the conjunctive occurrence of independent events. ‘a’, ‘b’, and ‘c’ refer to events; the ellipses indicate that there may be any number of them. When we write ‘P’ followed by something in parentheses, that’s just the probability of the thing in parentheses coming to pass. On the left-hand side of the equation, we have a bunch of events with dots in between them. The dot means the same thing it did in SL: it’s short for and. So this equation just tells us that to compute the probability of a and b and c (and however many others there are) occurring, we just multiply together the individual probabilities of those events occurring on their own.

Go back to the dice above. We roll two dice. What’s the probability of getting a pair of ones? The events—one on die #1, one on die #2—are independent, so we can use the Simple Product Rule and just multiply together their individual probabilities.

What are those probabilities? We express probabilities as numbers between 0 and 1. An event with a probability of 0 definitely won’t happen (a proposition with a probability of 0 is certainly false); an event with a probability of 1 definitely will happen (a proposition with a probability of 1 is certainly true). Everything else is a number in between: closer to 1 is more probable; closer to 0, less. So, how probable is it for a rolled die to show a one? There are six possible outcomes when you roll a die; each one is equally likely. When that’s the case, the probability of the particular outcome is just 1 divided by the number of possibilities. The probability of rolling a one is 1/6.

So, we calculate the probability of rolling “snake eyes” as follows:

P(one on die #1 • one on die #2) = P(one on die #1) x P(one on die #2)

= 1/6 x 1/6

= .0278

If you roll two dice a whole bunch of times, you’ll get a pair of one a little less than 3% of the time.

We noted earlier that if you draw two cards from a deck, two possible outcomes—first card is a heart, second card is a heart —are not independent. So we couldn’t calculate the probability of getting two spades using the Simple Product Rule. We could only do that if we made the two events independent—if we stipulated that after drawing the first card, you put it (randomly) back into the deck, so you’re picking at random from a full deck of cards each time. In that case, you’ve got a 1/4 chance of picking a heart each time, so the probability of picking two in a row would be 1/4 x 1/4—and the probability of picking three in a row would be 1/4 x 1/4 x 1/4, and so on.

Of course the more interesting question—and the more practical one, if you’re a card player looking for an edge—is the original one: what’s the probability of, say, drawing three hearts assuming, as is the case in all real-life card games, that you keep the cards as you draw them? As we noted, these events— heart on the first card, heart on the second card, heart on the third card— are not independent, because each time you succeed in drawing a heart, that affects your chances (negatively) of drawing another one. Let’s think about this effect in the current case. The probability of drawing the first heart from a well-shuffled, complete deck is simple: 1/4. It’s the subsequent hearts that are complicated. How much of an effect does success at drawing that first heart have on the probability of drawing the second one? Well, if we’ve already drawn one heart, the deck from which we’re attempting to draw the second is different from the original, full deck: specifically, it’s short the one card already drawn—so there are only 51 total—and it’s got fewer hearts now—12 instead of the original 13. 12 out of the remaining 51 cards are hearts, then. So the probability of drawing a second heart, assuming the first one has already been picked, is 12/51. If we succeed in drawing the second heart, what are our chances at drawing a third? Again, in this case, the deck is different: we’re now down to 50 total cards, only 11 of which are hearts. So the probability of getting the third heart is 11/50.

It’s these fractions—1/4, 12/51, and 11/50—that we must multiply together to determine the probability of drawing three straight hearts while keeping the cards. The result is (approximately) .013—a lower probability than that of picking 3 straight hearts when the cards are not kept, but replaced after each selection: 1/4 x 1/4 x 1/4 = .016 (approximately). This is as it should be: it’s harder to draw three straight hearts when the cards are kept, because each success diminishes the probability of drawing another heart. The events are not independent.

In general, when events are not independent, we have to make the same move that we made in the three-hearts case. Rather than considering the stand-alone probability of a second and third heart— as we could in the case where the events were independent—we had to consider the probability of those events assuming that other events had already occurred. We had to ask what the probability was of drawing a second heart, given that the first one had already been drawn; then we asked after the probability of drawing the third heart, given that the first two had been drawn.

We call such probabilities—the likelihood of an event occurring assuming that others have occurred—conditional probabilities. When events are not independent, the Simple Product Rule does not apply; instead, we must use the General Product Rule:

P(a • b • c • …) = P(a) x P(b | a) x P(c | a • b) x …

The term ‘P(b | a)’ stands for the conditional probability of b occurring, provided a already has. The term ‘P(c | a • b)’ stands for the conditional probability of c occurring, provided a and b already have. If there were a fourth event, d, we would have a this term on the right-hand side of the equation: ‘P(d | a • b • c)’. And so on.

Let’s reinforce our understanding of how to compute the probabilities of conjunctive occurrences with a sample problem:

There is an urn filled with marbles of various colors. Specifically, it contains 20 red marbles, 30 blue marbles, and 50 white marbles. If we select 4 marbles from the earn at random, what’s the probability that all four will be blue, (a) if we replace each marble after drawing it, and (b) if we keep each marble after drawing it?

Let’s let ‘B1’ stand for the event of picking a blue marble on the first selection; and we’ll let ‘B2’, ‘B3’, and ‘B4’ stand for the events of picking blue on the second, third, and fourth selections, respectively. We want the probability of all of these events occurring:

P(B1 • B2 • B3 • B4) = ?

If we replace each marble after drawing it, then the events are independent: selecting blue on one drawing doesn’t affect our chances of selecting blue on any other; for each selection, the urn has the same composition of marbles. Since the events are independent in this case, we can use the Simple Product Rule to calculate the probability:

P(B1 • B2 • B3 • B4) = P(B1) x P(B2) x P(B3) x P(B4)

And since there are 100 total marbles in the urn, and 30 of them are blue, on each selection we have a 30/100 (= .3) probability of picking a blue marble.

P(B1 • B2 • B3 • B4) = .3 x .3 x .3 x .3 = .0081

If we don’t replace the marbles after drawing them, then the events are not independent: each successful selection of a blue marble affects our chances (negatively) of drawing another blue marble. When events are not independent, we need to use the General Product Rule:

P(B1 • B2 • B3 • B4) = P(B1) x P(B2 | B1) x P(B3 | B1 • B2) x P(B4 | B1 • B2 • B3)

On the first selection, we have the full urn, so P(B1) = 30/100. But for the second term in our product, we have the conditional probability P(B2 | B1); we want to know the chances of selecting a second blue marble on the assumption that the first one has already been selected. In that situation, there are only 99 total marbles left, and 29 of them are blue. For the third term in our product, we have the conditional probability P(B3 | B1 • B2); we want to know the chances of drawing a third blue marble on the assumption that the first and second ones have been selected. In that situation, there are only 98 total marbles left, and 28 of them are blue. And for the final term—P(B4 | B1 • B2 • B3)—we want the probability of a fourth blue marble, assuming three have already been picked; there are 27 left out of a total of 97.

P(B1 • B2 • B3 • B4) = 30/100 x 29/99 x 28/98 x 27/97 = .007 (approximately)

Disjunctive Occurrences

Conjunctions are (roughly) ‘and’-sentences. Disjunctions are (roughly) ‘or’-sentences. So we can think of calculating the probability of disjunctive occurrences as calculating the probability that a particular disjunction is true. If, for example, you roll a die and you want to know the probability that it will come up with an odd number showing, you’re looking for the probability that you’ll roll a one or you’ll roll a three or you’ll roll a five.

As was the case with conjunctive occurrences, such calculations can be simple or slightly more complex. What distinguishes the two cases is whether or not the events involved are mutually exclusive. Events are mutually exclusive when at most one of them can occur—when the occurrence of one precludes the occurrence of any of the others. Consider the die mentioned above. We considered three events: it comes up showing one, it comes up showing three, and it comes up showing five. Those events are mutually exclusive; at most one of them can occur. If I roll a one, that means I can’t roll a three or a five; if I roll a three, that means I can’t roll a one or a five; and so on. (At most one of them can occur; notice, it’s possible that none of them occur.) On the other hand, consider the dice example from earlier: rolling two dice, with the events under consideration rolling a one on die #1 and rolling a one on die #2. These events are not mutually exclusive. It’s not the case that at most one of them could happen; they could both happen—we could roll snake eyes.

When events are mutually exclusive, things are simple. We calculate the probability of their disjunctive occurrence by adding the probabilities of their individual occurrences. This is the Simple Addition Rule:

P(a ∨ b ∨ c ∨ …) = P(a) + P(b) + P(c) + …

This rule exactly parallels the Simple Product Rule from above. We replace that rule’s dots with wedges, to reflect the fact that we’re calculating the probability of disjunctive rather than conjunctive occurrences. And we replace the multiplication signs with additions signs on the righthand side of the equation to reflect the fact that in such cases we add rather than multiply the individual probabilities.

Go back to the die above. We roll it, and we want to know the probability of getting an odd number. There are three mutually exclusive events—rolling a one, rolling a three, and rolling a five—and we want their disjunctive probability; that’s P(one ∨ three ∨ five). Each individual event has a probability of 1/6, so we calculate the disjunctive occurrence with the Simple Addition Rule thus:

P(one ∨ three ∨ five) = P(one) + P(three) + P(five)

= 1/6 + 1/6 + 1/6 = 3/6 = 1/2

This is a fine result, because it’s the result we knew was coming. Think about it: we wanted to know the probability of rolling an odd number; half of the numbers are odd, and half are even; so the answer better be 1/2. And it is.

Now, when events are not mutually exclusive, the Simple Addition Rule cannot be used; its results lead us astray. Consider a very simple example: flip a coin twice; what’s the probability that you’ll get heads at least once? That’s a disjunctive occurrence: we’re looking for the probability that you’ll get heads on the first toss or heads on the second toss. But these two events—heads on toss #1, heads on toss #2—are not mutually exclusive. It’s not the case that at most one can occur; you could get heads on both tosses. So in this case, the Simple Addition Rule will give us screwy results. The probability of tossing heads is 1/2, so we get this:

P(heads on #1 ∨ heads on #2) = P(heads on #1) + P(heads on #2)

= 1/2 + 1/2 = 1 [WRONG!]

If we use the Simple Addition Rule in this case, we get the result that the probability of throwing heads at least once is 1; that is, it’s absolutely certain to occur. Talk about screwy! We’re not guaranteed to gets heads at least once; we could toss tails twice in a row.

In cases such as this, where we want to calculate the probability of the disjunctive occurrence of events that are not mutually exclusive, we must do so indirectly, using the following universal truth:

P(success) = 1 – P(failure)

This formula holds for any event or combination of events whatsoever. It says that the probability of any occurrence (singular, conjunctive, disjunctive, whatever) is equal to 1 minus the probability that it does not occur. ‘Success’ = it happens; ‘failure’ = it doesn’t. Here’s how we arrive at the formula. For any occurrence, there are two possibilities: either it will come to pass or it will not; success or failure. It’s absolutely certain that at least one of these two will happen; that is, P(success failure) = 1. Success and failure are (obviously) mutually exclusive outcomes (they can’t both happen). So we can express P(success failure) using the Simple Addition Rule: P(success failure) = P(success) + P(failure). And as we’ve already noted, P(success failure) = 1, so P(success) + P(failure) = 1. Subtracting P(failure) from each side of the equation gives us our universal formula: P(success) = 1 – P(failure).

Let’s see how this formula works in practice. We’ll go back to the case of flipping a coin twice. What’s the probability of getting at least one head? Well, the probability of succeeding in getting at least one head is just 1 minus the probability of failing. What does failure look like in this case? No heads; two tails in a row. That is, tails on the first toss and tails on the second toss. See that ‘and’ in there? (I italicized it.) This was originally a disjunctive-occurrence calculation; now we’ve got a conjunctive occurrence calculation. We’re looking for the probability of tails on the first toss and tails on the second toss:

P(tails on toss #1 • tails on toss #2) = ?

We know how to do problems like this. For conjunctive occurrences, we need first to ask whether the events are independent. In this case, they clearly are. Getting tails on the first toss doesn’t affect my chances of getting tails on the second. That means we can use the Simple Product Rule:

P(tails on toss #1 • tails on toss #2) = P(tails on toss #1) x P(tails on toss #2)

= 1/2 x 1/2 = 1/4

Back to our universally true formula: P(success) = 1 – P(failure). The probability of failing to toss at least one head is 1/4. The probability of succeeding in throwing at least one head, then, is just 1 – 1/4 = 3/4.[5]

So, generally speaking, when we’re calculating the probability of disjunctive occurrences and the events are not mutually exclusive, we need to do so indirectly, by calculating the probability of the failure of any of the disjunctive occurrences to come to pass and subtracting that from 1. This has the effect of turning a disjunctive occurrence calculation into a conjunctive occurrence calculation: the failure of a disjunction is a conjunction of failures. This is a familiar point from our study of SL in Chapter 4. Failure of a disjunction is a negated disjunction; negated disjunctions are equivalent to conjunctions of negations. This is one of DeMorgan’s Theorems:

~ (p ∨ q) ≡ ~ p • ~ q

Let’s reinforce our understanding of how to compute probabilities with another sample problem. This problem will involve both conjunctive and disjunctive occurrences.

There is an urn filled with marbles of various colors. Specifically, it contains 20 red marbles, 30 blue marbles, and 50 white marbles. If we select 4 marbles from the urn at random, what’s the probability that all four will be the same color, (a) if we replace each marble after drawing it, and (b) if we keep each marble after drawing it? Also, what’s the probability that at least one of our four selections will be red, (c) if we replace each marble after drawing it, and (d) if we keep each marble after drawing it?

This problem splits into two: on the one hand, in (a) and (b), we’re looking for the probability of drawing four marbles of the same color; on the other hand, in (c) and (d), we want the probability that at least one of the four will be red. We’ll take these two questions up in turn.

First, the probability that all four will be the same color. We dealt with a narrower version of this question earlier when we calculated the probability that all four selections would be blue. But the present question is broader: we want to know the probability that they’ll all be the same color, not just one color (like blue) in particular, but any of the three possibilities—red, white, or blue. There are three ways we could succeed in selecting four marbles of the same color: all four red, all four white, or all four blue. We want the probability that one of these will happen, and that’s a disjunctive occurrence:

P(all 4 red ∨ all 4 white ∨ all 4 blue) = ?

When we are calculating the probability of disjunctive occurrences, our first step is to ask whether the events involved are mutually exclusive. In this case, they clearly are. At most, one of the three events—all four red, all four white, all four blue—will happen (and probably none of them will); we can’t draw four marbles and have them all be red and all be white, for example. Since the events are mutually exclusive, we can use the Simple Addition Rule to calculate the probability of their disjunctive occurrence:

P(all 4 red ∨ all 4 white ∨ all 4 blue) = P(all 4 red) + P(all 4 white) + P(all 4 blue)

So we need to calculate the probabilities for each individual color—that all will be red, all white, and all blue—and add those together. Again, this is the kind of calculation we did earlier, in our first practice problem, when we calculated the probability of all four marbles being blue. We just have to do the same for red and white. These are calculations of the probabilities of conjunctive occurrences:

P(R1 • R2 • R3 • R4) = ?

P(W1 • W2 • W3 • W4) = ?

(a) If we replace the marbles after drawing them, the events are independent, and so we can use the Simple Product Rule to do our calculations:

P(R1 • R2 • R3 • R4) = P(R1) x P(R2) x P(R3) x P(R4)

P(W1 • W2 • W3 • W4) = P(W1) x P(W2) x P(W3) x P(W4)

Since 20 of the 100 marbles are red, the probability of each of the individual red selections is .2; since 50 of the marbles are white, the probability for each white selection is .5.

P(R1 • R2 • R3 • R4) = .2 x .2 x .2 x .2 = .0016

P(W1 • W2 • W3 • W4) = .5 x .5 x .5 x .5 = .0625

In our earlier sample problem, we calculated the probability of picking four blue marbles: .0081. Putting these together, the probability of picking four marbles of the same color:

P(all 4 red ∨ all 4 white ∨ all 4 blue) = P(all 4 red) + P(all 4 white) + P(all 4 blue)

= .0016 + .0625 + .0081

= .0722

(b) If we don’t replace the marbles after each selection, the events are not independent, and so we must use the General Product Rule to do our calculations. The probability of selecting four red marbles is this:

P(R1 • R2 • R3 • R4) = P(R1) x P(R2 | R1) x P(R3 | R1 • R2) x P(R4 | R1 • R2 • R3)

We start with 20 out of 100 red marbles, so P(R1) = 20/100. On the second selection, we’re assuming the first red marble has been drawn already, so there are only 19 red marbles left out of a total of 99; P(R2 | R1) = 19/99. For the third selection, assuming that two red marbles have been drawn, we have P(R3 | R1 • R2) = 18/98. And on the fourth selection, we have P(R4 | R1 • R2 • R3) = 17/97.

P(R1 • R2 • R3 • R4) = 20/100 x 19/99 x 18/98 x 17/97 = .0012 (approximately)

The same considerations apply to our calculation of drawing four white marbles, except that we start with 50 of those on the first draw:

P(W1 • W2 • W3 • W4) = 50/100 x 49/99 x 48/98 x 47/97 = .0587 (approximately)

In our earlier sample problem, we calculated the probability of picking four blue marbles as .007. Putting these together, the probability of picking four marbles of the same color:

P(all 4 red ∨ all 4 white ∨ all 4 blue) = P(all 4 red) + P(all 4 white) + P(all 4 blue)

= .0012 + .0587 + .007

= .0669 (approximately)

As we would expect, there’s a slightly lower probability of selecting four marbles of the same color when we don’t replace them after each selection.

We turn now to the second half of the problem, in which we are asked to calculate the probability that at least one of the four marbles selected will be red. The phrase ‘at least one’ is a clue: this is a disjunctive occurrence problem. We want to know the probability that the first marble will be red or the second will be red or the third or the fourth:

P(R1 ∨ R2 ∨ R3 ∨ R4) = ?

When our task is to calculate the probability of disjunctive occurrences, the first step is to ask whether the events are mutually exclusive. In this case, they are not. It’s not the case that at most one of our selections will be a red marble; we could pick two or three or even four (we calculated the probability of picking four just a minute ago). That means that we can’t use the Simple Addition Rule to make this calculation. Instead, we must calculate the probability indirectly, relying on the fact that P(success) = 1 – P(failure). We must subtract the probability that we don’t select any red marbles from 1:

P(R1 ∨ R2 ∨ R3 ∨ R4) = 1 – P(no red marbles)

As is always the case, the failure of a disjunctive occurrence is just a conjunction of individual failures. Not getting any red marbles is failing to get a red marble on the first draw and failing to get one on the second draw and failing on the third and on the fourth:

P(R1 ∨ R2 ∨ R3 ∨ R4) = 1 – P(~ R1 • ~ R2 • ~ R3 • ~ R4)

In this formulation, ‘~ R1’ stands for the eventuality of not drawing a red marble on the first selection, and the other terms for not getting red on the subsequent selections. Again, we’re just borrowing symbols from SL.

Now we’ve got a conjunctive occurrence problem to solve, and so the question to ask is whether the events ~ R1, ~ R2, and so on are independent or not. And the answer is that it depends on whether we replace the marbles after drawing them or not.

If we replace the marbles after each selection, then failure to pick red on one selection has no effect on the probability of failing to select red subsequently. It’s the same urn— with 20 red marbles out of 100—for every pick. In that case, we can use the Simple Product Rule for our calculation:

P(R1 ∨ R2 ∨ R3 ∨ R4) = 1 – [P(~ R1) x P(~ R2) x P(~ R3) x P(~ R4)]

Since there are 20 red marbles, there are 80 non-red marbles, so the probability of picking a color other than red on any given selection is .8.

P(R1 ∨ R2 ∨ R3 ∨ R4) = 1 – (.8 x .8 x .8 x .8)

= 1 – .4096

= .5904

If we don’t replace the marbles after each selection, then the events are not independent, and we must use the General Product Rule for our calculation. The quantity that we subtract from 1 will be this:

P(~ R1) x P(~ R2 | ~ R1) x P(~ R3 | ~ R1 • ~ R2) x P(~ R4 | ~ R1 • ~ R2 • ~ R3) = ?

On the first selection, our chances of picking a non-red marble are 80/100. On the second selection, assuming we chose a non-red marble the first time, our chances are 79/99. And on the third and fourth selections, the probabilities are 78/98 and 77/97, respectively. Multiplying all these together, we get .4033 (approximately), and so our calculation of the probability of getting at least one red marble looks like this:

P(R1 ∨ R2 ∨ R3 ∨ R4) = 1 – .4033 = .5967 (approximately)

We have a slightly better chance at getting a red marble if we don’t replace them, since each selection of a non-red marble makes the urn’s composition a little more red-heavy.

EXERCISES

- Flip a coin 6 times; what’s the probability of getting heads every time?

- Go into a racquetball court and use duct tape to divide the floor into four quadrants of equal area. Throw three super-balls in random directions against the walls as hard as you can. What’s the probability that all three balls come to rest in the same quadrant?

- You’re at your grandma’s house for Christmas, and there’s a bowl of holiday-themed M&Ms— red and green ones only. There are 500 candies in the bowl, with equal number of each color. Pick one, note its color, then eat it. Pick another, note its color, and eat it. Pick a third, note its color, and eat it. What’s the probability that you ate three straight red M&Ms?

- You and two of your friends enter a raffle. There is one prize: a complete set of Ultra Secret Rare Pokémon cards. There are 1000 total tickets sold; only one is the winner. You buy 20, and your friends each buy 10. What’s the probability that one of you wins those Pokémon cards?

- You’re a 75% free-throw shooter. You get fouled attempting a 3-point shot, which means you get 3 free-throw attempts. What’s the probability that you make at least one of them?

- Roll two dice; what’s the probability of rolling a seven? How about an eight?

- In my county, 70% of people believe in Big Foot. Pick three people at random. What’s the probability that at least one of them is a believer in Big Foot?

- You see these two boxes here on the table? Each of them has jelly beans inside. We’re going to play a little game, at the end of which you have to pick a random jelly bean and eat it. Here’s the deal with the jelly beans. You may not be aware of this, but food scientists are able to create jelly beans with pretty much any flavor you want—and many you don’t want. There is, in fact, such a thing as vomit-flavored jelly beans.[6] Anyway, in one of my two boxes, there are 100 total jelly beans, 8 of which are vomit-flavored (the rest are normal fruit flavors). In the other box, I have 50 jelly beans, 7 of which are vomit-flavored. Remember, this all ends with you choosing a random jelly bean and eating it. But you have a choice between two methods of determining how it will go down: (a) You flip a coin, and the result of the flip determines which of the two boxes you choose a jelly bean from; (b) I dump all the jelly beans into the same box and you pick from that. Which option do you choose? Which one minimizes the probability that you’ll end up eating a vomit-flavored jelly bean? Or does it not make any difference?

- For men entering college, the probability that they will finish a degree within four years is .329; for women, it’s .438. Consider two freshmen—Albert and Betty. What’s the probability that at least one of them will fail to complete college in at least four years? What’s the probability that exactly one of them will succeed in doing so?

- I love Chex Mix. My favorite things in the mix are those little pumpernickel chips. But they’re relatively rare compared to the other ingredients. That’s OK, though, since my second-favorite are the Chex pieces themselves, and they’re pretty abundant. I don’t know what the exact ratios are, but let’s suppose that it’s 50% Chex cereal, 30% pretzels, 10% crunchy bread sticks, and 10% my beloved pumpernickel chips. Suppose I’ve got a big bowl of Chex Mix: 1,000 total pieces of food. If I eat three pieces from the bowl, (a) what’s the probability that at least one of them will be a pumpernickel chip? And (b) what’s the probability that either all three will be pumpernickel chips or all three will be my second-favorite—Chex pieces?

- You’re playing draw poker. Here’s how the game works: a poker hand is a combination of five cards; some combinations are better than others; in draw poker, you’re dealt an initial hand, and then, after a round of wagering, you’re given a chance to discard some of your cards (up to three) and draw new ones, hoping to improve your hand; after another round of betting, you see who wins. In this particular hand, you’re initially dealt a 7 of hearts and the 4, 5, 6, and King of spades. This hand is quite weak on its own, but it’s very close to being quite strong, in two ways: it’s close to being a “flush”, which is five cards of the same suit (you have four spades); it’s also close to being a “straight”, which is five cards of consecutive rank (you have four in a row in the 4, 5, 6, and 7). A flush beats a straight, but in this situation that doesn’t matter; based on how the other players acted during the first round of betting, you’re convinced that either the straight or the flush will win the money in the end. The question is, which one should you go for? Should you discard the King, hoping to draw a 3 or an 8 to complete your straight? Or should you discard the 7 of hearts, hoping to draw a spade to complete your flush? What’s the probability for each? You should pick whichever one is higher.[7]

II. Probability and Decision-Making: Value and Utility

The future is uncertain, but we have to make decisions every day that have an effect on our prospects, financial and otherwise. Faced with uncertainty, we do not merely throw up our hands and guess randomly about what to do; instead, we assess the potential risks and benefits of a variety of options, and choose to act in a way that maximizes the probability of a beneficial outcome. Things won’t always turn out for the best, but we have to try to increase the chances that they will. To do so, we use our knowledge—or at least our best estimates—of the probabilities of future events to guide our decisions.

The process of decision-making in the face of uncertainty is most clearly illustrated with examples involving financial decisions. When we make a financial investment, or—what’s effectively though not legally the same thing—a wager, we’re putting money at risk with the hope that it will pay off in a larger sum of money in the future. We need a way of deciding whether such bets are good ones or not. Of course, we can evaluate our financial decisions in hindsight, and deem the winning bets good choices and the losing ones bad choices, but that’s not a fair standard. The question is, knowing what we knew at the time we made our decision, did we make the choice that maximized the probability of a profitable outcome, even if the profit was not guaranteed?

To evaluate the soundness of a wager or investment, then, we need to look not at its worth after the fact—its final value, we might say—but rather at the value we can reasonably expect it to have in the future, based on what we know at the time the decision is made. We’ll call this the expected value. To calculate the expected value of a wager or investment, we must take into consideration (a) the various possible ways in which the future might turn out that are relevant to our bet, (b) the value of our investment in those various circumstances, and (c) the probabilities that these various circumstances will come to pass. The expected value is a weighted average of the values in the different circumstances; it is weighted by the probabilities of each circumstance. Here is how we calculate expected value (EV):

EV = P(O1) x V(O1) + P(O2) x V(O2) + … + P(On) x V(On)

This formula is a sum; each term in the sum is the product of a probability and a value. The terms ‘O1, O2, …, On’ refer to all the possible future outcomes that are relevant to our bet. P(Ox) is the probability that outcome #x will come to pass. V(Ox) is the value of our investment should outcome #x come to pass.

Perhaps the simplest possible scenario we can use to illustrate how this calculation works is the following: you and your roommate are bored, so you decide to play a game; you’ll each put up a dollar, then flip a coin; if it comes up heads, you win all the money; if it comes up tails, she does.[8] What’s the expected value of your $1 bet? First, we need to consider which possible future circumstances are relevant to your bet’s value. Clearly, there are two: the coin comes up heads, and the coin comes up tails. There are two outcomes in our formula: O1 = heads, O2 = tails. The probability of each of these is 1/2. We must also consider the value of your investment in each of these circumstances. If heads comes up, the value is $2—you keep your dollar and snag hers, too. If tails comes up, the value is $0—you look on in horror as she pockets both bills. (Note: value is different from profit. You make a profit of $1 if heads comes up, and you suffer a loss of $1 if tails does—or your profit is -$1. Value is how much money you’re holding at the end.) Plugging the numbers into the formula, we get the expected value:

EV = P(heads) x V(heads) + P(tails) x V(tails) = 1/2 x $2 + 1/2 x $0 = $1

The expected value of your $1 bet is $1. You invested a dollar with the expectation of a dollar in return. This is neither a good nor a bad bet. A good bet is one for which the expected value is greater than the amount invested; a bad bet is one for which it’s less.

This suggests a standard for evaluating financial decisions in the real world: people should look to put their money to work in such a way that the expected value of their investments is as high as possible (and, of course, higher than the invested amount). Suppose I have $1,000 free to invest. One way to put that money to work would be to stick it in a money market account, which is a special kind of savings deposit account one can open with a bank. Such accounts offer a return on your investment in the form of a payment of a certain amount of interest—a percentage of your deposit amount. Interest is typically specified as a yearly rate. So a money market account offering a 1% rate pays me 1% of my deposit amount after a year.[9] Let’s calculate the expected value of an investment of my $1,000 in such an account. We need to consider the possible outcomes that are relevant to my investment. I can only think of two possibilities: at the end of the year, the bank pays me my money; or, at the end of the year, I get stiffed—no money. The calculation looks like this:

EV = P(paid) x V(paid) + P(stiffed) x V(stiffed)

One of the things that makes this kind of investment attractive is that it’s virtually risk-free. Bank deposits of up to $250,000 are insured by the federal government.[10] So even if the bank goes out of business before I withdraw my money, I’ll still get paid in the end.[11] That means P(paid) = 1 and P(stiffed) = 0. Nice. What’s the value when I get paid? It’s the initial $1,000 plus the 1% interest. 1% of $1,000 is $10, so V(paid) = $1,010.

That’s not much of a return, but interest rates are low these days, and it’s not a risky investment. We could increase the expected value if we put our money into something that’s not a sure thing. One option is corporate bonds. For this type of investment, you lend your money to a company for a specified period of time (and they use it to build a factory or something), then you get paid back the principal investment plus some interest.12 Corporate bonds are a riskier investment than bank deposits because they’re no insured by the federal government. If you company goes bankrupt before the date you’re supposed to get paid back, you lose your money. That is, P(paid) in the expected value calculation above is no longer 1; P(stiffed) is somewhere north of 0. What are the relevant probabilities? Well, it depends on the company. There are firms in the “credit rating” business—Moody’s, S&P, Fitch, etc.—that put out reports and classify companies according to how risky they are to loan money to. They assign ratings like ‘AAA’ (or ‘Aaa’, depending on the agency), ‘AA’, ‘BBB’, ‘CC’, and so on. The further into the alphabet you get, the higher the probability you’ll get stiffed. It’s impossible to say exactly what that probability is, of course; the credit rating agencies provide a rough guide, but ultimately it’s up to the individual investor to decide what the risks are and whether they’re worth it.

To determine whether the risks are worth it, we must compare the expected value of an investment in a corporate bond with a baseline, risk-free investment—like our money market account above. Since the probability of getting paid is less than 1, we must have a higher yield than 1% to justify choosing the corporate bond over the safer investment. How much higher? It depends on the company; it depends on how likely it is that we’ll get paid back in the end.

The expected value calculation is simple in these kinds of cases. Even though P(stiffed) is not 0, V(stiffed) is; if we get stiffed, our investment is worth nothing. So when calculating expected value, we can ignore the second term in the sum. All we have to do is multiply P(paid) by V(paid).

Suppose we’re considering investing in a really reliable company; let’s say P(paid) = .99. Doing the math, in order for a corporate bond with this reliable company to be a better bet than a money market account, they’d have offer an interest rate of a little more than 2%. If we consider a less reliable company—say one for which P(paid) = .95—then we’d need a rate of little more than

when George is about to leave on his honeymoon, but he has to go back to the Bailey Building and Loan to prevent such a catastrophe. Anyway, if everybody knows they’ll get their money back even if the bank goes under, such things won’t happen; that’s what the FDIC is for.

Unless, of course, the federal government goes out of business. But in that case, $1,000 is useful maybe as emergency toilet paper; I need canned goods and ammo at that point.

Again, there are all sorts of complications we’re glossing over to keep things simple.

Probably. There are different kinds of bankruptcies and lots of laws governing them; it’s possible for investors to get some money back in probate court. But it’s complicated. One thing’s for sure: our measly $1,000 imaginary investment makes us too small-time to have much of a chance of getting paid during bankruptcy proceedings. 14 Historical data on the probability of default for companies at different ratings by agency are available.

6.3% to make this a better investment. If we go down to a 90% chance of getting paid back, we need a yield of more than 12% to justify that decision.

What does it mean to be a good, rational economic agent? How should a person, generally speaking, invest money? As we mentioned earlier, a plausible rule governing such decisions would be something like this: always choose the investment for which expected value is maximized.

But real people deviate from this rule in their monetary decisions, and it’s not at all clear that they’re irrational to do so. Consider the following choice: (a) we’ll flip a coin, and if it comes up heads, you win $1,000, but if it comes up tails, you win nothing; (b) no coin flip, you just win $499, guaranteed. The expected value of choice (b) is just the guaranteed $499. The value of choice (a) can be easily calculated:

EV = P(heads) x V(heads) + P(tails) x V(tails)

= (.5 x $1,000) + (.5 x $0)

= $500

So according to our principle, it’s always rational to choose (a) over (b): $500 > $499. But in real life, most people who are offered such a choice go with the sure-thing, (b). (If you don’t share that intuition, try upping the stakes—coin flip for $10,000 vs. $4,999 for sure.) Are people who make such a choice behaving irrationally?

Not necessarily. What such examples show is that people take into consideration not merely the value, in dollars, of various choices, but the subjective significance of their outcomes—the degree to which they contribute to the person’s overall well-being. As opposed to ‘value’, we use the term ‘utility’ to refer to such considerations. In real life decisions, what matters is not the expected value of an investment choice, but its expected utility—the degree to which it satisfies a person’s desires, comports with subjective preferences.

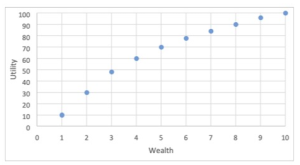

The tendency of people to accept a sure thing over a risky wager, despite a lower expected value, is referred to as risk aversion. This is the consequence of an idea first formalized by the mathematician Daniel Bernoulli in 1738: the diminishing marginal utility of wealth. The basic idea is that as the amount of money one has increases, each addition to one’s fortune becomes less important, from a personal, subjective point of view. An extra $1,000 means very little to Bill Gates; an extra $1,000 for a poor college student would mean quite a lot. The money would add very little utility for Gates, but much more for the college student. Increases in one’s fortune above zero mean more than subsequent increases. Bernoulli’s utility function looked something like this[12]:

Diminishing Marginal Utility of Wealth

This explains the choice of the $499 sure-thing over the coin flip for $1,000. The utility attached to those first $499 is greater than the extra utility of the additional possible $501 dollars one could potentially win, so people opt to lock in the gain. Utility rises quickly at first, but levels out at higher amounts. From Bernoulli’s chart, the utility of the sure-thing is somewhere around 70, while the utility of the full $1,000 is only 30 more—100. Computing the expected utility of the coin-flip wager gives us this result:

EU = P(heads) x U(heads) + P(tails) x U(tails)

= (.5 x 100) + (.5 x 0)

= 50

The utility of 70 for the sure-thing easily beats the expected utility from the wager. It is possible to get people to accept risky bets over sure-things, but one must take into account this diminishing marginal utility. For a person whose personal utility function is like Bernoulli’s, an offer of a mere $300 (where the utility is down closer to 50) would make the decision more difficult. An offer of $200 would cause them to choose the coin flip.

It has long been accepted economic doctrine that rational economic agents act in such a way as to maximize their utility, not their value. It is a matter of some dispute what sort of utility function best captures rational economic agency. Different economic theories assume different versions of ideal rationality for the agents in their models.

Recently, this practice of assuming perfect utility-maximizing rationality of economic agents has been challenged. While it’s true that the economic models generated under such assumptions can provide useful results, as a matter of fact, the behavior of real people (homo sapiens as opposed to “homo economicus”—the idealized economic man of the models) departs in predictable ways from the utility-maximizing ideal. Psychologists—especially Daniel Kahneman and Amos Tversky— have conducted a number of experiments that demonstrate pretty conclusively that people regularly behave in ways that, by the lights of economic theory, are irrational. For example, consider the following two scenarios (go slowly; think about your choices carefully):

You have $1,000. Which would you choose?

Coin flip. Heads, you win another $1,000; tails, you win nothing.

An additional $500 for sure.

You have $2,000. Which would you choose?

Coin flip. Heads you lose $1,000; tails, you lose nothing.

Lose $500 for sure.[13]

According to the Utility Theory of Bernoulli and contemporary economics, the rational agent would choose option (b) in each scenario. Though they start in different places, for each scenario option (a) is just a coin flip between $1,000 and $2,000, while (b) is $1,500 for sure. Because of the diminishing marginal utility of wealth, (b) is the utility-maximizing choice each time. But as a matter of fact, most people choose option (b) only in the first scenario; they choose option (a) in the second. (If you don’t share this intuition, try upping the stakes.) It turns out that most people dislike losing more than they like winning, so the prospect of a guaranteed loss in 2(b) is repugnant. Another example: would you accept a wager on a coin flip, where heads wins you $1,500, but tails loses you $1,000? Most people would not. (Again, if you’re different, try upping the stakes.) And this despite the fact that clearly expected value and utility point to accepting the proposition.

Kahneman and Tversky’s alternative to Utility Theory is called “Prospect Theory”. It accounts for these and many other observed regularities in human economic behavior. For example, people’s willingness to overpay for a very small chance at a very large gain (lottery tickets); also, their willingness to pay a premium to eliminate small risks (insurance); their willingness to take on risk to avoid large losses; and so on.[14]

It’s debatable whether the observed deviations from idealized utility-maximizing behavior are rational or not. The question “What is an ideally rational economic agent?” is not one that we can answer easily. That’s a question for philosophers to grapple with. The question that economists are grappling with is whether, and to what extent, they must incorporate these psychological regularities into their models. Real people are not the utility-maximizers the models say they are. Can we get more reliable economic predictions by taking their actual behavior into account? Behavioral economics is the branch of that discipline that answers this question in the affirmative. It is a rapidly developing field of research.

EXERCISES

- You buy a $1 ticket in a raffle. There are 1,000 tickets sold. Tickets are selected out of one of those big round drums at random. There are 3 prizes: first prize is $500; second prize is $200; third prize is $100. What’s the expected value of your ticket?

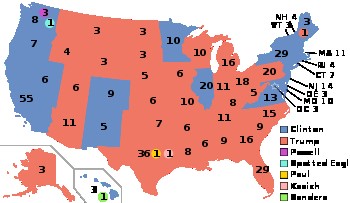

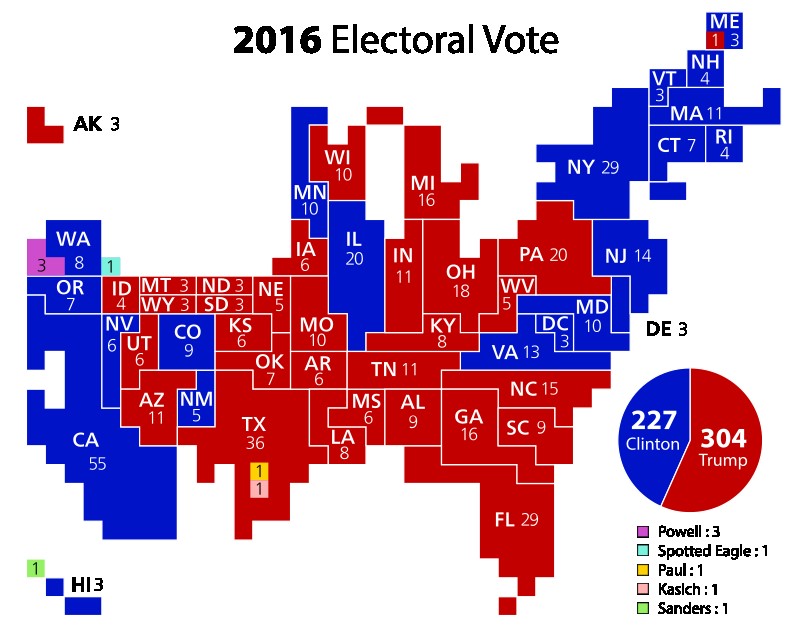

- On the eve of the 2016 U.S. presidential election, the poll-aggregating website 538.com predicted that Donald Trump had a 30% chance of winning. It’s possible to wager on these sorts of things, believe it or not (with bookmakers or in “prediction markets”). On election night, right before 8:00pm EST, the “money line” odds on a Trump victory were +475. That means that a wager of $100 on Trump would earn $475 in profit, for a total final value of $575. Assuming the 538.com crew had the probability of a Trump victory right, what was the expected value of a $100 wager at 8:00pm at the odds listed?

- You’re offered three chances to roll a one with a fair die. You put up $10 and your challenger puts up $10. If you succeed in rolling one even once, you win all the money; if you fail, your challenger gets all the money. Should you accept the challenge? Why or why not?

- You’re considering placing a wager on a horse race. The horse you’re considering is a longshot; the odds are 19 to 1. That means that for every dollar you wager, you’d win $19 in profit (which means $20 total in your pocket afterwards). How probable must it be that the horse will win for this to be a good wager (in the sense that the expected value is greater than the amount bet)?

- I’m looking for a good deal in the junk bond market. These are highly risky corporate bonds; the risk is compensated for with higher yields. Suppose I find a company that I think has a 25% chance of going bankrupt before the bond matures. How high of a yield do I need to be offered to make this a good investment (again, in the sense that the expected value is greater than the price of the investment)?

- For someone with a utility function like that described by Bernoulli (see above), what would their choice be if you offered them the following two options: (a) coin flip, with heads winning $8,000 and tails winning $2,000; (b) $5,000 guaranteed? Explain why they would make that choice, in terms of expected utility. How would increasing the lower prize on the coin-flip option change things, if at all? Suppose we increased it to $3,000. Or $4,000. Explain your answers.

III. Probability and Belief: Bayesian Reasoning

The great Scottish philosopher David Hume, in his An Enquiry Concerning Human Understanding, wrote, “In our reasonings concerning matter of fact, there are all imaginable degrees of assurance, from the highest certainty to the lowest species of moral evidence. A wise man, therefore, proportions his belief to the evidence.” Hume is making a very important point about a kind of reasoning that we engage in every day: the adjustment of beliefs in light of evidence. We believe things with varying degrees of certainty, and as we make observations or learn new things that bear on those beliefs, we make adjustments to our beliefs, becoming more or less certain accordingly. Or, at least, that’s what we ought to do. Hume’s point is an important one because too often people do not adjust their beliefs when confronted with evidence—especially evidence against their cherished opinions. One needn’t look far to see people behaving in this way: the persistence and ubiquity of the beliefs, for example, that vaccines cause autism, or that global warming is a myth, despite overwhelming evidence to the contrary, are a testament to the widespread failure of people to proportion their beliefs to the evidence, to a general lack of “wisdom”, as Hume puts it.

Here we have a reasoning process—adjusting beliefs in light of evidence—which can be done well or badly. We need a way to distinguish good instances of this kind of reasoning from bad ones. We need a logic. As it happens, the tools for constructing such a logic are ready to hand: we can use the probability calculus to evaluate this kind of reasoning.

Our logic will be simple: it will be a formula providing an abstract model of perfectly rational belief-revision. The formula will tell us how to compute a conditional probability. It’s named after the 18th century English reverend who first formulated it: Thomas Bayes. It is called “Bayes’ Law” and reasoning according to its strictures is called “Bayesian reasoning”.

At this point, you will naturally be asking yourself something like this: “What on Earth does a theorem about probability have to do with adjusting beliefs based on evidence?” Excellent question; I’m glad you asked. As Hume mentioned in the quote we started with, our beliefs come with varying degrees of certainty. Here, for example, are three things I believe: (a) 1 + 1 = 2; (b) the earth is approximately 93 million miles from the sun (on average); (c) I am related to Winston Churchill. I’ve listed them in descending order: I’m most confident in (a), least confident in (c). I’m more confident in (a) than (b), since I can figure out that 1 + 1 = 2 on my own, whereas I have to rely on the testimony of others for the Earth-to-Sun distance. Still, that testimony gives me a much stronger belief than does the testimony that is the source of (c). My relation to Churchill is apparently through my maternal grandmother; the details are hazy. Still, she and everybody else in the family always said we were related to him, so I believe it.

“Fine,” you’re thinking, “but what does this have to do with probabilities?” Our degrees of belief in particular claims can vary between two extremes: complete doubt and absolute certainty. We could assign numbers to those states: complete doubt is 0; absolute certainty is 1. Probabilities also vary between 0 and 1! It’s natural to represent degrees of beliefs as probabilities. This is one of the philosophical interpretations of what probabilities really are.[15] It’s the so-called “subjective” interpretation, since degrees of belief are subjective states of mind; we call these “personal probabilities”. Think of rolling a die. The probability that it will come up showing a one is 1/6. One way of understanding what that means is to say that, before the die was thrown, the degree to which you believed the proposition that the die will come up showing one—the amount of confidence you had in that claim—was 1/6. You would’ve had more confidence in the claim that it would come up showing an odd number—a degree of belief of 1/2.

We’re talking about the process of revising our beliefs when we’re confronted with evidence. In terms of probabilities, that means raising or lowering our personal probabilities as warranted by the evidence. Suppose, for example, that I was visiting my grandmother’s hometown and ran into a friend of hers from way back. In the course of the conversation, I mention how grandma was related to Churchill. “That’s funny,” says the friend, “your grandmother always told me she was related to Mussolini.” I’ve just received some evidence that bears on my belief that I’m related to Churchill. I never heard this Mussolini claim before. I’m starting to suspect that my grandmother had an odd eccentricity: she enjoyed telling people that she was related to famous leaders during World War II. (I wonder if she ever claimed to be related to Stalin. FDR? Let’s pray Hitler was never invoked. And Hirohito would strain credulity; my grandma was clearly not Japanese.) In response to this evidence, if I’m being rational, I would revise my belief that I’m related to Winston Churchill: I would lower my personal probability for that belief; I would believe it less strongly. If, on the other hand, my visit to my grandma’s hometown produced a different bit of evidence— let’s say a relative had done the relevant research and produced a family genealogy tracing the relation to Churchill—then I would revise my belief in the other direction, increasing my personal probability, believing it more strongly.

Since belief-revision in this sense just involves adjusting probabilities, our model for how it works is just a means of calculating the relevant probabilities. That’s why our logic can take the form of an equation. We want to know how strongly we should believe something, given some evidence about it. That’s a conditional probability. Let ‘H’ stand for a generic hypothesis—something we believe to some degree or other; let ‘E’ stand for some evidence we discover. What we want to know is how to calculate P(H | E)—the probability of H given E, how strongly we should believe H in light of the discovery of E.

Bayes’ Law tells us how to perform this calculation. Here’s one version of the equation:[16]

P(H) x P(E | H)

P(H | E) = —————————-

P(E)

This equation has some nice features. First of all, the presence of ‘P(H)’ in the numerator is intuitive. This is often referred to as the “prior probability” (or “prior” for short); it’s the degree to which the hypothesis was believed prior to the discovery of the evidence. It makes sense that this would be part of the calculation: how strongly I believe in something now ought to be (at least in part) a function of how strongly I used to believe it. Second, ‘P(E | H)’ is a useful item to have in the calculation, since it’s often a probability that can be known. Notice, this is the reverse of the conditional probability we’re trying to calculate: it’s the probability of the evidence, assuming that the hypothesis is true (it may not be, but we assume it is, as they say, “for the sake of argument”). Consider an example: as you may know, being sick in the morning can be a sign of pregnancy; if this were happening to you, the hypothesis you’d be entertaining would be that you’re pregnant, and the evidence would be vomiting in the morning. The conditional probability you’re interested in is P(pregnant | vomiting)—that is, the probability that you’re pregnant, given that you’ve been throwing up in the morning. Part of using Bayes’ Law to make this calculation involves the reverse of that conditional probability: P(vomiting | pregnant)—the probability that you’d be throwing up in the morning, assuming (for the sake of argument) that you are in fact pregnant. And that’s something we can just look up; studies have been done. It turns out that about 60% of women experience have morning sickness (to the point of throwing up) during the first trimester of pregnancy. There are lots of facts like this available. Did you know that a craving for ice is a potential sign of anemia? Apparently it is: 44% of anemia patients have the desire to eat ice. Similar examples are not hard to find. It’s worth noting, in addition, that sometimes the reverse probability in question—P(E | H)—is 1. In the case of a prediction made by a scientific hypothesis, this is so. Isaac Newton’s theory of universal gravitation, for example, predicts that objects dropped from the same height will take the same amount of time to reach the ground, regardless of their weights (provided that air resistance is not a factor). This prediction is just a mathematical result of the equation governing gravitational attraction. So if H is Newton’s theory and E is a bowling ball and a feather taking the same amount of time to fall, then P(E | H) = 1; if Newton’s theory is true, then it’s a mathematical certainty that the evidence will be observed.[17]

So this version of Bayes’ Law is attractive because of both probabilities in the numerator: P(H), the prior probability, is natural, since the adjusted degree of belief ought to depend on the prior degree of belief; and P(E | H) is useful, since it’s a probability that we can often know precisely. The formula is also nice in that it comports well with our intuitions about how belief-revision ought to work. It does this in three ways.

First, we know that implausible hypotheses are hard to get people to believe; as Carl Sagan once put it, “Extraordinary claims require extraordinary evidence.” Putting this in terms of personal probabilities, an implausible hypothesis—and extraordinary claim—is just one with a low prior: P(H) is a small fraction. Consider an example. In the immediate aftermath of the 2358 U.S. presidential election, some people claim that the election was rigged (possibly by Mars) in favor of General Krang by way of a massive computer hacking scheme that manipulated the vote totals in key precincts.22 You have very little confidence in this hypothesis— and give it an extremely low prior probability—for lots of reasons, but two in particular: (a) Voting machines in individual precincts are not networked together, so any hacking scheme would have to be carried out on a machine-by-machine basis across hundreds—if not thousands—of precincts, an operation of almost impossible complexity; (b) An organization with practically unlimited financial resources and the strongest possible motivation for uncovering such a scheme—namely, the anti-Krang campaign—looked at the data and concluded there was nothing fishy going on. But none of this stops wishful-thinking anti-Krang voters from digging for evidence that in fact the fix had been in for Krang. When people approach you with this kind of evidence—look at these suspiciously high turnout numbers from a handful of precincts in rural Wisconsin!— your degree of belief in the hypothesis—that the Martians had hacked the election—barely budges. This is proper; again, extraordinary claims require extraordinary evidence, and future you isn’t seeing it. This intuitive fact about how belief-revision is supposed to work is borne out by the equation for Bayes’ Law. Implausible hypotheses have a low prior—P(H) is a small fraction. It’s hard to increase our degree of belief in such propositions—P(H | E) doesn’t easily rise—simply because we’re multiplying by a low fraction in the numerator when calculating the new probability.

The math mirrors the actual mechanics of belief-revision in two more ways. Here’s a truism: the more strongly predictive piece of evidence is for a given hypothesis, the more it supports that hypothesis when we observe it. We saw above that women who are pregnant experience morning sickness about 60% of the time; also, patients suffering from anemia crave ice (for some reason) 44% of the time. In other words, throwing up in the morning is more strongly predictive of pregnancy than ice-craving is of anemia. Morning sickness would increase belief in the hypothesis of pregnancy more than ice-craving would increase belief in anemia. Again, this banal observation is borne out in the equation for Bayes’ Law. When we’re calculating how strongly we should believe in a hypothesis in light of evidence—P(H | E)—we always multiply in the numerator by the reverse conditional probability—P(E | H)—the probability that you’d observe the evidence, assuming the hypothesis is true. For pregnancy/sickness, this means multiplying by .6; for anemia/ice-craving, we multiply by .44. In the former case, we’re multiplying by a higher number, so our degree of belief increases more.

A third intuitive fact about belief-revision that our equation correctly captures is this: surprising evidence provides strong confirmation of a hypothesis. Consider the example of Albert Einstein’s general theory of relativity, which provided a new way of understanding gravity: the presence of massive objects in a particular region of space affects the geometry of space itself, causing it to be curved in that vicinity. Einstein’s theory has a number of surprising consequences, one of which is that because space is warped around massive objects, light will not travel in a straight line in those places.[18] In this example, H is Einstein’s general theory of relativity, and E is an observation of light following a curvy path. When Einstein first put forward his theory in 1915, it was met with incredulity by the scientific community, not least because of this astonishing prediction. Light bending? Crazy! And yet, four years later, Arthur Eddington, an English astronomer, devised and executed an experiment in which just such an effect was observed. He took pictures of stars in the night sky, then kept his camera trained on the same spot and took another picture during an eclipse of the sun (the only time the stars would also be visible during the day). The new picture showed the stars in slightly different positions, because during the eclipse, their light had to pass near the sun, whose mass caused their path to be deflected slightly, just as Einstein predicted. As soon as Eddington made his results public, newspapers around the world announced the confirmation of general relativity and Einstein became a star. As we said, surprising results provide strong confirmation; hardly anything could be more surprising that light bending. We can put this in terms of personal probabilities. Bending light was the evidence, so P(E) represents the degree of belief someone would have in the proposition that light will travel a curvy path. This was a very low number before Eddington’s experiments. When we use is to calculate how strongly we should believe in general relativity given the evidence that light in fact bends—P(H | E)—it’s in the denominator of our equation. Dividing by a very small fraction means multiplying by its reciprocal, which is a very large number. This makes P(H | E) go up dramatically. Again, the math mirrors actual reasoning practice.

So, our initial formulation of Bayes’ Law has a number of attractive features; it comports well with our intuitions about how belief-revision actually works. But it is not the version of Bayes’ Law that we will settle on the make actual calculations. Instead, we will use a version that replaces the denominator—P(E)—with something else. This is because that term is a bit tricky. It’s the prior probability of the evidence. That’s another subjective state—how strongly you believed the evidence would be observed prior to its actual observation, or something like that. Subjectivity isn’t a bad thing in this context; we’re trying to figure out how to adjust subjective states (degrees of belief), after all. But the more of it we can remove from the calculation, the more reliable our results. As we discussed, the subjective prior probability for the hypothesis in question—P(H)— belongs in our equation: how strongly we believe in something now ought to be a function of how strongly we used to believe in it. The other item in the numerator—P(E | H)—is most welcome, since it’s something we can often just look up—an objective fact. But P(E) is problematic. It makes sense in the case of light bending and general relativity. But consider the example where I run into my grandma’s old acquaintance and she tells me about her claims to be related to Mussolini. What was my prior for that? It’s not clear there even was one; the possibility probably never even occurred to me. I’d like to get rid of the present denominator and replace it with the kinds of terms I like—those in the numerator.

I can do this rather easily. To see how, it will be helpful to consider the fact that when we’re evaluating a hypothesis in light of some evidence, there are often alternative hypotheses that it’s competing with. Suppose I’ve got a funny looking rash on my skin; this is the evidence. I want to know what’s causing it. I may come up with a number of possible explanations. It’s winter, so maybe it’s just dry skin; that’s one hypothesis. Call it ‘H1’. Another possibility: we’ve just started using a new laundry detergent at my house; maybe I’m having a reaction. H2 = detergent. Maybe it’s more serious, though. I get on the Google and start searching. H3 = psoriasis (a kind of skin disease). Then my hypochondria gets out of control, and I get really scared: H4 = leprosy. That’s all I can think of, but it may not be any of those: H5 = some other cause.

I’ve got five possible explanations for my rash—five hypotheses I might believe in to some degree in light of the evidence. Notice that the list is exhaustive: since I added H5 (something else), one of the five hypotheses will explain the rash. Since this is the case, we can say with certainty that I have a rash and it’s caused by the cold, or I have a rash and it’s caused by the detergent, or I have a rash and it’s caused by psoriasis, or I have a rash and it’s caused by leprosy, or I have a rash and it’s caused by something else. Generally speaking, when a list of hypotheses is exhaustive of the possibilities, the following is a truth of logic:

E ≡ (E • H1) ∨ (E • H2) ∨ … ∨ (E • Hn)

For each of the conjunctions, it doesn’t matter what order you put the conjuncts, so this true, too:

E ≡ (H1 • E) ∨ (H2 • E) ∨ … ∨ (Hn • E)

Remember, we’re trying to replace P(E) in the denominator of our formula. Well, if E is equivalent to that long disjunction, then P(E) is equal to the probability of the disjunction:

P(E) = P[(H1 • E) (H2 • E) … (Hn • E)]

We’re calculating a disjunctive probability. If we assume that the hypotheses are mutually exclusive (only one of them can be true), then we can use the Simple Addition Rule:[19]

P(E) = P(H1 • E) + P(H2 • E) + … + P(Hn • E)

Each item in the sum is a conjunctive probability calculation, for which we can use the General Product Rule:

P(E) = P(H1) x P(E | H1) + P(H2) x P(E | H2) + … + P(Hn) x P(E | Hn)

And look what we have there: each item in the sum is now a product of exactly the two types of terms that I like—a prior probability for a hypothesis, and the reverse conditional probability of the evidence assuming the hypothesis is true (the thing I can often just look up). I didn’t like my old denominator, but it’s equivalent to something I love. So I’ll replace it. This is our final version of Bayes’ Law:

| P(Hk) x P(E | Hk) | ||

| P(Hk | E) = | —————————————————– | [1 ≤ k ≤ n][20] |

| P(H1) x P(E | H1) + P(H2) x P(E | H2) + … + P(Hn) x P(E | Hn) |

Let’s see how this works in practice. Consider the following scenario:

Your mom does the grocery shopping at your house. She goes to two stores: Fairsley Foods and Gibbons’ Market. Gibbons’ in closer to home, so she goes there more often—80% of the time. Fairsley sometimes has great deals, though, so she drives the extra distance and shops there 20% of the time.

You can’t stand Fairsley. First of all, they’ve got these annoying commercials with the crazy owner shouting into the camera and acting like a fool. Second, you got lost in there once when you were a little kid and you’ve still got emotional scars. Finally, their produce section is terrible: in particular, their peaches—your favorite fruit—are often mealy and bland, practically inedible. In fact, you’re so obsessed with good peaches that you made a study of it, collecting samples over a period of time from both stores, tasting and recording your data. It turns out that peaches from Fairsley are bad 40% of the time, while those from Gibbons’ are only bad 20% of the time. (Peaches are a fickle fruit; you’ve got to expect some bad ones no matter how much care you take.)

Anyway, one fine day you walk into the kitchen and notice a heaping mound of peaches in the fruit basket; mom apparently just went shopping. Licking your lips, you grab a peach and take a bite. Ugh! Mealy, bland—horrible. “Stupid Fairsley,” you mutter as you spit out the fruit. Question: is your belief that the peach came from Fairsley rational? How strongly should you believe that it came from that store?

This is the kind of question Bayes’ Law can help us answer. It’s asking us about how strongly we should believe in something; that’s just calculating a (conditional) probability. We want to know how strongly we should believe that the peach came from Fairsley; that’s our hypothesis. Let’s call it ‘F’. These types of calculations are always of conditional probabilities: we want the probability of the hypothesis given the evidence. In this case, the evidence is that the peach was bad; let’s call that ‘B’. So the probability we want to calculate is P(F | B)—the probability that the peach came from Fairsley given that it’s bad.

At this point, we reference Bayes’ Law and plug things into the formula. In the numerator, we want the prior probability for our hypothesis, and the reverse conditional probability of the evidence assuming the hypothesis is true:

P(F) x P(B | F)

P(F | B) = —————————————-

In the denominator, we need a sum, with each term in the sum having exactly the same form as our numerator: a prior probability for a hypothesis multiplied by the reverse conditional probability. The sum has to have one such term for each of our possible hypotheses. In our scenario, there are only two: that the fruit came from Fairsley, or that it came from Gibbons’. Let’s call the second hypothesis ‘G’. Our calculation looks like this:

P(F) x P(B | F)

P(F | B) = ——————————————-

P(F) x P(F | B) + P(G) x P(F | G)

Now we just have to find concrete numbers for these various probabilities in our little story. First, P(F) is the prior probability for the peach coming from Fairsley—that is, the probability that you would’ve assigned to it coming from Fairsley prior to discovering the evidence that it was bad— before you took a bite. Well, we know mom’s shopping habits: 80% of the time she goes to Gibbons’; 20% of the time she goes to Fairsley. So a random piece of food—our peach, for example—has a 20% probability of coming from Fairsley. P(F) = .2. And for that matter, the peach has an 80% probability of coming from Gibbons’, so the prior probability for that hypothesis— P(G)—is .8. What about P(B | F)? That’s the conditional probability that a peach will be bad assuming it came from Fairsley. We know that! You did a systematic study and concluded that 40% of Fairsley’s peaches are bad; P(B | F) = .4. Moreover, your study showed that 20% of peaches from Gibbons’ were bad, so P(G | F) = .2. We can now plug in the numbers and do the calculation:

P(F | B) = (.2 x .4) / ((.2 x .4) + (.8 x .2)) = .08/(.08 + .16) = 1/3

As a matter of fact, the probability that the bad peach you tasted came from Fairsley—the conclusion to which you jumped as soon as you took a bite—is only 1/3. It’s twice as likely that the peach came from Gibbons’. Your belief is not rational. Despite the fact that Fairsley peaches are bad at twice the rate of Gibbons’, it’s far more likely that your peach came from Gibbons’, mainly because your mom does so much more of her shopping there.

So here we have an instance of Bayes’ Law performing the function of a logic—providing a method for distinguishing good from bad reasoning. Our little story, it turns out, depicted an instance of the latter, and Bayes’ Law showed that the reasoning was bad by providing a standard against which to measure it. Bayes’ Law, on this interpretation, is a model of perfectly rational belief-revision. Of course many real-life examples of that kind of reasoning can’t be subjected to the kind of rigorous analysis that the (made up) numbers in our scenario allowed. When we’re actually adjusting our beliefs in light of evidence, we often lack precise numbers; we don’t walk around with a calculator and an index card with Bayes’ Law on it, crunching the numbers every time we learn new things. Nevertheless, our actual practices ought to be informed by Bayesian principles; they ought to approximate the kind of rigorous process exemplified by the formula. We should keep in mind the need to be open to adjusting our prior convictions, the fact that alternative possibilities exist and ought to be taken into consideration, the significance of probability and uncertainty to our deliberations about what to believe and how strongly to believe it. Again, Hume: the wise person proportions belief according to the evidence.

EXERCISES

- Women are twice as likely to suffer from anxiety disorders as men: 8% to 4%. They’re also more likely to attend college: these days, it’s about a 60/40 ratio of women to men. (Are these two phenomena related? That’s a question for another time.) If a random person is selected from my logic class, and that person suffers from an anxiety disorder, what’s the probability that it’s a woman?