37 Principles for Using AI in the Classroom and How to Acknowledge It

Joel Gladd

This chapter offers a practical guide to navigating AI in both your college courses and your future career. Whether you’re excited about AI, skeptical of it, or somewhere in between, you’ll need to understand how these tools fit into your academic and professional life.

In this chapter, we’ll cover:

- How AI is changing different career fields, from healthcare to automotive repair

- The different ways you might work with AI (as a “centaur,” “cyborg,” or even a thoughtful “resister”)

- How to follow your college’s AI policies while still building valuable skills

- Clear guidelines for properly citing and acknowledging AI use in your coursework

By the end, you’ll have a clearer picture of how to approach AI tools thoughtfully.

Why do I need to understand generative AI?

College is about learning how to think and work in ways that last. That means picking up durable skills, habits of mind and practice that hold up when the situation changes. And the situation is changing. Generative AI (GenAI) and machine learning aren’t fringe tools anymore. They’re quietly, and sometimes not so quietly, reshaping how people work.

By May 2024, Microsoft reported that three out of four knowledge workers were using GenAI. A few months later, another survey put that number even higher. People said it helped them focus, saved them time, and made their work feel more creative. Some even said it made work enjoyable again.

In fields like business, marketing, and computer science, GenAI is already part of the daily routine. It helps teams draft content, plan strategy, debug code, analyze feedback, and write messages that sound like they weren’t written by a machine. In healthcare, it’s enhancing diagnostic images, automating paperwork, assisting with treatment plans, and even supporting drug development. And in the trades (yes, even in a repair shop) AI can now interpret sensor data, suggest likely fixes, manage inventory, and simulate training scenarios. It’s also helping mechanics explain complex issues in plain language and estimate repair times more accurately.

Across all of these fields, the pattern is the same. AI steps in where there’s complexity and repetition, where decisions depend on patterns, and where communication needs to be fast and clear. The tools may differ, but the shift is real. And wherever you’re headed (clinic, shop floor, office, or something else entirely) it’s worth learning how AI is already shaping the work.

This chapter isn’t going to hype the latest tech or push a particular view. We’re not interested in convincing you that AI is good or bad, essential or optional. The goal is simpler: to offer a clearer picture of how AI is being used in workplaces and in higher ed, and to surface the questions that matter as this technology becomes more common. From there, you’ll need to decide what kind of relationship you want to have with it.

Principles for Using AI in the Workplace

Before discussing how to think about GenAI in college, it will help to briefly suggest some strategies for using AI tools effectively in your future career, since that is precisely the situation you’re preparing for (and many of you are working right now). Below we offer a few straightforward principles for using these tools confidently and responsibly. Note that this chapter assumes you have some familiarity with how LLMs work; if you need to review the limitations and risks associated with GenAI, refer back to the chapter that covers them.

Professor Ethan Mollick, who studies how people work with AI in higher education and the workplace, suggests two key principles in his book “Co-Intelligence” that can help you get started:

Principle 1: Invite AI to the Table

AI can often be treated as a crucial resource in your professional toolkit, much like having a really smart assistant. The trick is to get in the habit of asking yourself, “Could AI help me with this task?” The sooner you start practicing with these tools, the more natural they’ll feel to use as they get more advanced.

Principle 2: Be the Human in the Loop

Inviting AI to the table must be coupled with critical awareness. AI may be your assistant, but you need to provide oversight and steering. Unlike a calculator, which simply returns answers to math problems, AI often requires your judgment if you want to use it successfully and ethically. Depending on the task, you will frequently need to:

- check if its information is accurate (accuracy and hallucinations);

- make sure what it suggests is ethical;

- use your own expertise to decide if its suggestions or outputs make sense (relevance).

Of course, this is also important while you’re in college. You need to build up your own knowledge and skills first. AI should help you apply what you know, not replace your learning. For example, if you’re studying automotive repair, you need to understand how engines work before using AI to help diagnose problems. Or if you’re in healthcare, you need to understand basic medical concepts before using AI to help with patient care. More on that below.

Centaurs, Cyborgs, and Resisters: Understanding Your AI Style

How you use AI may depend on your comfort level. Some people blend AI seamlessly into their work, others prefer a clear boundary between human-created and AI-generated content, and still others take a more antagonistic stance toward these tools. Mollick uses the metaphors of centaurs and cyborgs to describe the first two approaches. We’re adding a third category, resisters.

Centaurs

A centaur’s approach has clear lines between human and machine tasks, like the mythical centaur with its distinct human upper body and horse lower body. Centaurs divide tasks strategically: the person handles what they’re best at while AI manages other parts. Here’s Mollick’s example: you might use your expertise in statistics to choose the best model for analyzing your data but then ask AI to generate a variety of interactive graphs. AI becomes, for a centaur, a tool for specific tasks within their workflow.

Cyborgs

Cyborgs deeply integrate their work with AI. They don’t just delegate tasks; they blend their efforts with AI, constantly moving back and forth between human and machine. A cyborg approach might look like writing part of a paragraph, asking AI to suggest how to complete it, and revising the AI’s suggestion to match your style. [Warning: Cyborgs may be more likely to violate academic integrity, so be aware of your instructor’s AI policy. See below for more guidance.]

Resisters: The Diogenes Approach

Mollick does not suggest this third option, but we think it’s important to recognize that some students and professionals feel deeply uncomfortable with even the centaur approach, and our institution and faculty will support this preference as well. Not everyone will embrace GenAI. Some may prefer to actively resist its influence, raising critical awareness about its limitations and risks. Like the ancient Greek philosopher Diogenes, who made challenging cultural norms his life’s work, Resisters might focus on warning others about AI’s potential downsides and advocating for caution in its use. Of course, those taking this stance should understand the tools as well as centaurs and cyborgs do. In fact, Resisters may need to study these tools even more deliberately.

It’s not practical to always identify as a cyborg, centaur, or resister. These are styles of interacting with an emerging technology, not identities. The most sophisticated cyborgs will occasionally become centaurs, and sometimes even resisters, when the situation calls for it. Likewise, someone who feels more attracted to the resister mode will have to grok what it means to be a cyborg or centaur if they intend to offer critical guidance to others.

Principles for Using AI in the Classroom

Educational environments foster durable skills that prepare you for workplace and lifelong success. However, there is a key difference between the classroom and the workplace: while you’re learning certain skills, instructors need to be able to assess the choices you’re making, often under challenging circumstances, to offer guidance about how to succeed.

This means instructors must be able to see the labor, that is, the choices a student made in order to figure out how to respond to a challenge. This usually requires effort, what some like to call “friction,” and it’s often uncomfortable at first. It also takes time. GenAI can often reduce that friction, which makes it feel easy to outsource your thinking and time (the fancy term is “cognitive offloading”).

Why instructors want to see your work

It’s true, to a certain extent, that computer programmers can program with words and increasingly rely on higher-order thinking rather than just typing out routine functions again and again (see: Cursor, Replit, and other software that can build entire apps and websites with simple prompts). Many operations are becoming higher-order. But to access those higher-order ways of thinking (prompting with models and concepts in mind), you first need to become proficient in the models and concepts themselves. It’s why you’re in college. Without those tools and frameworks, you’ll be as replaceable as any other worker who can type things into a chatbot. But with those tools and the comfort of working with them in challenging environments, you will better unlock the potential of AI. It’s hard to overemphasize how important this basic concept is: relying too heavily on AI during parts of your college workflow and learning can lead to your own “deskilling” through cognitive offloading. But using these tools in certain parts of your workflow can also boost learning.

Comprehending (and not just vaguely recognizing) the concepts and models taught in each course often requires wrestling with challenges unassisted by AI. On the other hand, at certain points in your course workflow, assistance can be incredibly effective. Your instructor may ask you to practice the rhetorical appeals with a custom AI chatbot, for example, before demonstrating your proficiency by drafting an essay unassisted. It’s increasingly common for higher ed instructors to build AI assistance into their course expectations but also expect unassisted work at certain points. It’s your job as a student to remain aware of their course and assignment expectations regarding AI. If you’re not sure, ask.

Higher education is beginning to adjust to this new world in which chatbots can help students at any moment. These tools may help reduce the amount of busy work you feel in the classroom; no one enjoys struggling with tasks they find unenjoyable or meaningless. At the same time, you will be expected to demonstrate what choices you’ve made in order to solve certain challenges, and that will sometimes take work and struggle. Course policies and sanctions provide guardrails to ensure you’re prepared for greater challenges ahead.

Important note about AI detectors in college and the workplace

Keep in mind that many companies and institutions are now using AI detectors to filter resumes, cover letters, and grant applications. In college, your instructor may have a very restrictive AI policy and use detectors (in ways that protect your privacy) to determine how much work is likely to have been machine-generated. You should be aware of your instructor’s (and potential employer’s) expectations. See below for more about CWI’s AI Syllabus Policies as well as how to acknowledge when GenAI has impacted your work.

Understanding AI Syllabus Policies

This section offers guidance on how to understand AI course policies at CWI. There are three options that your instructors choose from: 1) most restrictive, 2) moderately restrictive, and 3) least restrictive. In each part below, you will find the official language followed by some guidance on how to interpret what’s allowed and what’s prohibited. Note that any use of GenAI that impacts a submission must be accompanied by an acknowledgement statement.

MOST RESTRICTIVE POLICY

Aligned with my commitment to academic integrity and teaching focus of creating original, independent work, the use of generative artificial intelligence (AI) tools, including but not limited to ChatGPT, DALL-E, and similar platforms, to develop and submit work as your own is prohibited in this course. Using AI for assignments constitutes academic dishonesty, equitable to cheating and plagiarism, and will be met with sanctions consistent with any other Academic Integrity violation.

What this allows:

- Since the language focuses on generative AI such as ChatGPT and other Large Language Models (LLMs), this does not restrict using other forms of machine learning, such as transcription tools that help with accessibility, or basic tools such as grammar and spell-check. Note that some grammar tools, such as Grammarly, have generative AI options (Grammarly Pro), and the GenAI options to paraphrase or revise writing would not be allowed under this policy.

What this prohibits at the course-level:

- For longer writing tasks, outlining may be prohibited, so ask the instructor for clarification.

- Using AI to draft responses (written, math-based, programming, etc.) to assignments is prohibited.

- Using AI to revise or alter responses is likely prohibited.

What may be allowed (but you should ask):

- It depends on what your instructor means by “develop.” Some forms of brainstorming may or may not be allowed, but it would also be impossible to enforce a policy that prohibits any brainstorming with generative AI.

MODERATELY RESTRICTIVE POLICY

Aligned with my commitment to academic integrity and the ethical use of technology, this course allows AI tools like ChatGPT, DALL-E, and similar platforms for specific tasks such as brainstorming, idea refinement, and grammar checks. Using AI to write drafts or complete assignments is not permitted, and any use of AI must be cited, including the tool used, access date, and query. It is the expectation that in all uses of AI, students critically evaluate the information for accuracy and bias while respecting privacy and copyright laws.

What this allows:

- Anything allowed under the category above (Most Restrictive) is also allowed here.

- Brainstorming and outlining are allowed or even encouraged.

What this prohibits at the course-level:

- Drafts you intend to submit to the course (written, verbal, math-based, etc.) cannot be generated by AI.

- Any other use of generative AI to help with submitted coursework must be acknowledged and explained.

What may be allowed (but you should ask):

- Your instructor may allow generative AI for improving certain aspects of a completed draft, such as revising topic sentences, etc. Ask before doing this and acknowledge AI use.

- Since this applies at the course-level, your instructor may allow or even ask you to use AI for certain tasks.

LEAST RESTRICTIVE POLICY

Aligned with my commitment to academic integrity, creativity, and ethical use of technology, AI tools like ChatGPT, DALL-E, and similar platforms to enhance learning are encouraged as a supplementary resource and not a replacement for personal insight or analysis. Any use of AI must be cited, including the tool used, access date, and query. I expect that in all uses of AI, students critically evaluate the information for accuracy and bias while respecting privacy and copyright laws.

What this allows:

- Anything from the allowed categories above (Most Restrictive and Moderately Restrictive).

- Students can submit work (such as essay drafts or code snippets) that has been assisted by GenAI.

What this prohibits at the course-level:

- You cannot use GenAI to assist with submitted coursework unless it is acknowledged and explained.

- Since this applies at the course-level, your instructor may ask you not to use AI for certain tasks.

Acknowledging and Citing Generative AI (GenAI) in Academic Work

This final section offers guidance on how to acknowledge and cite GenAI when it impacts something you submit in a course. AI Acknowledgement Statements are increasingly an expectation in many courses and professional environments. At CWI, it’s essential to include these statements in order to comply with academic integrity.

Monash University provides helpful recommendations for how to acknowledge when and how you’ve used generated material as part of an assignment or project. If you decide to use generative artificial intelligence such as ChatGPT for an assignment, it’s a best practice to include a statement that does the following:

- Provides a written acknowledgment of the use of generative artificial intelligence.

- Specifies which technology was used.

- Includes explicit descriptions of how the information was generated.

- Identifies the prompts used.

- Explains how the output was used in your work.

The format Monash University provides is also helpful. Students can include this information either in a cover letter or in an appendix to the submitted work. Your instructor may provide more guidance on where to include the acknowledgement statement.

Template

I acknowledge the use of [insert AI system(s) and link] to [specific use of generative artificial intelligence]. The prompts used include [list of prompts]. The output from these prompts was used to [explain use].

Example

I acknowledge the use of [1] ChatGPT [2] (https://chat.openai.com/) [3] to refine the academic language and accuracy of my own work. On 4 January 2023 I submitted my entire essay (link to google document here) with the instruction to “Improve the academic tone and accuracy of language, including grammatical structures, punctuation and vocabulary”. [4] The output (here) [5] was then modified further to better represent my own tone and style of writing.
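If you keep notes on your AI use as you work, a short script can assemble the statement for you. The Python sketch below is one possible way to fill in the template above; it is not an official CWI or Monash tool. The function name `build_acknowledgement`, its parameters, and the sample values are all hypothetical and chosen only for illustration.

```python
def build_acknowledgement(tool, link, purpose, prompts, output_use):
    """Fill in the acknowledgement template with the details of one AI use.

    All parameter names are illustrative; they mirror the bracketed
    placeholders in the template.
    """
    # Quote each prompt so readers can see exactly what was asked.
    prompt_list = "; ".join(f'"{p}"' for p in prompts)
    return (
        f"I acknowledge the use of {tool} ({link}) to {purpose}. "
        f"The prompts used include {prompt_list}. "
        f"The output from these prompts was used to {output_use}."
    )


# Hypothetical example values, not taken from a real assignment.
statement = build_acknowledgement(
    tool="ChatGPT",
    link="https://chat.openai.com/",
    purpose="brainstorm possible essay topics",
    prompts=["Suggest five debatable topics about campus sustainability"],
    output_use="choose a topic, which I then researched and wrote about on my own",
)
print(statement)
```

A script like this only handles the formatting. You still need to record your prompts accurately and describe honestly how the output shaped your work.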

Academic style guides such as APA already include guidelines for including appendices after essays and reports. Review Purdue OWL’s entry on Footnotes and Appendices for help.

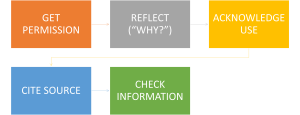

A Checklist for Acknowledging Generative AI (GenAI) Tools

Liza Long developed the following checklist to provide guidance on using and acknowledging AI in a course:

- Check with your instructor to make sure you have permission to use these tools.

- Reflect on how and why you want to use generative artificial intelligence in your work. If the answer is “to save time” or “so I don’t have to do the work myself,” think about why you are in college in the first place. What skills are you supposed to practice through this assignment? Will using generative artificial intelligence really save you time in the long run if you don’t have the opportunity to learn and practice these skills?

- If you decide to use generative artificial intelligence, acknowledge your use, either in an appendix or a cover letter.

- Always check the information provided by a generative artificial intelligence tool against a trusted source. Be especially careful of any sources that generative artificial intelligence provides.

References

Monash University. (n.d.). Acknowledging the use of generative artificial intelligence. https://www.monash.edu/learnhq/build-digital-capabilities/create-online/acknowledging-the-use-of-generative-artificial-intelligence