7 How Do Large Language Models (LLMs) like ChatGPT Work?

Joel Gladd

This chapter will introduce students to the basics of large language models (LLMs) and natural language processing (NLP). It’s written for someone who has no familiarity with machine learning. By the end of this chapter, students will learn:

- the difference between human-centered writing and machine-generated text;

- how Natural Language Processing (NLP) works, including tokenization and embedding;

- the different ways Large Language Model (LLMs) become aligned, including OpenAI’s RLHF and Anthropic’s Constitutional approach;

- the limitations and risks associated with LLMs, especially bias, censorship, and hallucinations.

Natural Language Processing, Tokenization, and Embedding

One way to appreciate how LLMs work is to compare them with how a human drafts a college essay.

Human-Centered Model of Writing

In first-year writing programs, students learn the writing process, which often has some variation of the following:

- Free write and brainstorm about a topic.

- Research and take notes.

- Analyze and synthesize research and personal observations.

- Draft a coherent essay based on the notes.

- Get feedback.

- Revise and copy-edit.

- Publish/submit the draft!

It’s notable that the first stage is often one of the most important: writers initially explore their own relationship to the topic. When doing so, they draw on prior experiences and beliefs. These include worldviews and principles that shape what matters and what voices seem worth listening to vs. others.

Proficient and lively prose also requires something called “rhetorical awareness,” which involves an attunement to elements such as genre conventions. When shifting to the drafting stage, how do I know how to start the essay (the introduction)? What comes next? Where do I insert the research I found? How do I interweave my personal experiences and beliefs? How do I tailor my writing to the needs of my audience? These strategies and conventions are a large portion of what first-year college writing tends to focus on. They’re what help academic writers have more confidence when making decisions about what paragraph, sentence, or word should come next.

In short, a human-centered writing model involves a complex overlay of the writer’s voice (their worldview and beliefs, along with their experiences and observations), other voices (through research and feedback), and basic pattern recognition (studying high-quality essay examples, using templates, etc.). It’s highly interactive and remains “social” throughout.

Machine Model of Writing

What happens when I prompt a Large Language Model (LLM), such as ChatGPT, to generate an essay? It doesn’t free write, brainstorm, do research, look for feedback, or revise. Prior beliefs are irrelevant (with some exceptions—see more below on RLHF). It doesn’t have a worldview. It has no experience. Instead, something very different happens to generate the output.

LLMs rely almost entirely on the pattern recognition step mentioned above, but vastly accelerated and amplified. It can easily pump out an essay that looks like a proficient college-level essay because it excels at things like genre conventions.

How does it do this?

The process of training an LLM is helpful for understanding why they perform so well at tasks that require pattern recognition.

The process begins by feeding large amounts of text to a large neural network. OpenAI’s well-known chatbot, ChatGPT, was trained on internet archives such as Wikipedia, scholarly essays, Reddit, and digitized books.

The process of translating human language found in these archives into code that machines can analyze and manipulate is called Natural Language Processing (NLP). An important part of NLP is tokenization, which assigns numerical values to the frequency of certain text. Here’s a video offers an excellent introduction to tokenization:

https://youtube.com/watch?v=f5YJA5mQD5c%3Flist%3DPLOU2XLYxmsII9mzQ-Xxug4l2o04JBrkLV

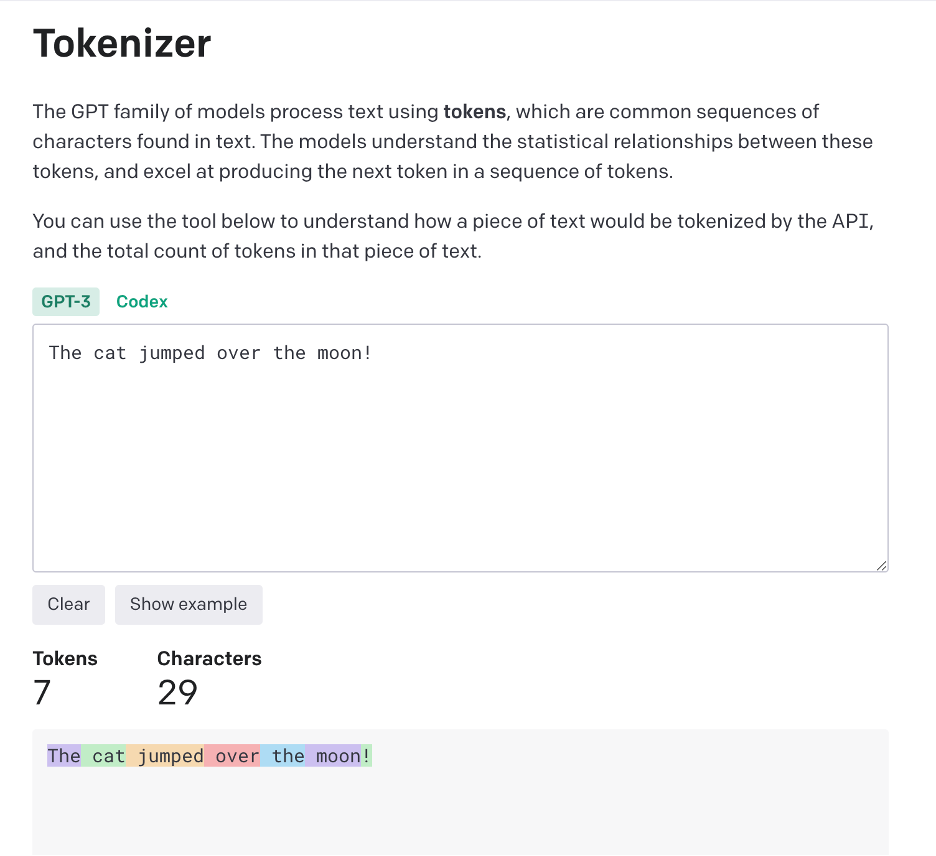

Basically, tokenization represents words as numbers. As OpenAI explains on its own website,

The GPT family of models process text using tokens, which are common sequences of characters found in text. The models understand the statistical relationships between these tokens, and excel at producing the next token in a sequence of tokens. (Tokenizer)

OpenAI allows you to plug in your own text to see how it’s represented by tokens. Here’s a screenshot of the sentence: “The cow jumped over the moon!”

Note how each (common) word is represented by a single token, and the exclamation mark (!) also counts as its own token.

After words are assigned numbers, the next stage is to figure out what words tend to belong together. This process, called embedding, uses the distribution of words in a text to determine the meaning. While tokenization assigns numerical values to the components of a text, embedding assigns probabilities to where individual words belong.

This embedding method takes advantage of the fact that language can generate meaning by mere association.

Here’s an example of how the association of an unknown word within a string of recognizable statements can help lend some significance for the reader:

(6.1) Ongchoi is delicious sauteed with garlic.

(6.2) Ongchoi is superb over rice.

(6.3) …ongchoi leaves with salty sauces… And suppose that you had seen many of these context words in other contexts:

(6.4) …spinach sauteed with garlic over rice…

(6.5) …chard stems and leaves are delicious…

(6.6) …collard greens and other salty leafy greens. (Jurafsky & Martin, 2023, p. 107)

After reading the series of statemens, “ongchoi” slowly makes sense to many students who are proficient in the English language. Jurafsky and Martin explain:

The fact that ongchoi occurs with words like rice and garlic and delicious and salty, as do words like spinach, chard, and collard greens might suggest that ongchoi is a leafy green similar to these other leafy greens. We can do the same thing computationally by just counting words in the context of ongchoi. (2023, p. 7)

Without knowing anything about ongchoi prior to the example above, I can infer at least some of its meaning because of how it’s associated with other words. Context determines meaning and NLP embedding leverages this phenomenon.

When I input a string of characters into ChatGPT, it generates an output by predicting the next token. That’s it!

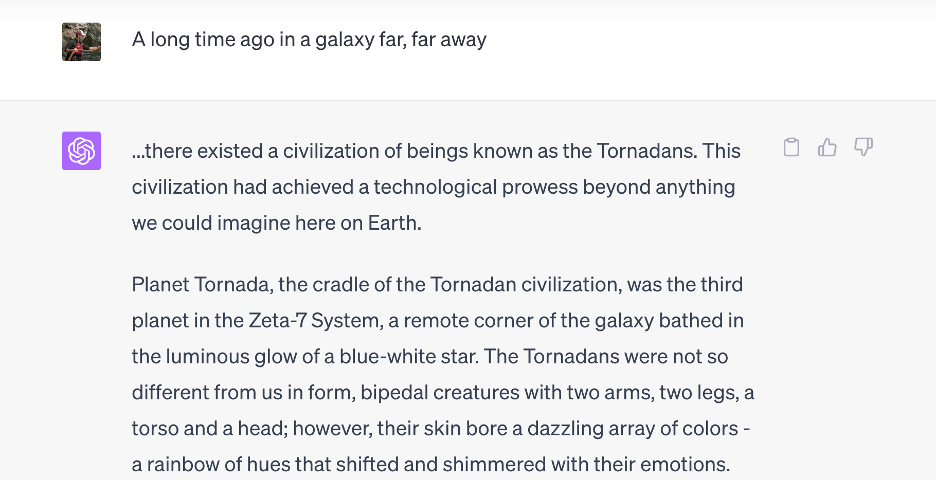

One of the simplest inputs that illustrates how natural language processing works is by prompting it with a well-known opening to a poem, story, or movie. Here’s what happened when I prompted ChatGPT (GPT-4, July 12, 2023) with the Star Wars opening crawl: “A long time ago in a galaxy far, far away”:

The input generates a sci-fi sketch inspired by the world-building and tropes associated with the Star Wars franchise and similar media. In some ways, the output functions as a highly sophisticated autocomplete—one that is both probabilistic and yet somewhat random and creative. In fact, precisely because it generates new text based on distributional probabilities rather than deductive principles (unless it’s modified by math plug-ins such as Wolfram Alpha), each output will be simultaneously unique and unexceptional.

Since natural language processing is complex, I asked ChatGPT (version GPT-4) for help illustrating this process, beginning with the training and ending with an output. Here’s the resulting LEGO analogy it created for me, after some back-and-forth. It begins with the “Input Text,” which means the data the LLM is trained on.

LEGO Analogy

1. Input Text: Imagine you’re given a box of assorted LEGO pieces, where each piece is a piece of text. The text could be anything: a tweet, a poem, a novel, a scientific article. Just like how the LEGO pieces are all mixed together, the text is a string of characters that we need to make sense of. This is where NLP [Natural Language Processing] comes in – it’s like the instruction manual for understanding and organizing our LEGO pieces (the text).

2. Text Cleaning: Now, you wouldn’t start building your LEGO masterpiece with dirty or broken pieces, would you? In the same way, we need to “clean” our text. This step involves removing any “dirt” such as special characters, punctuation, HTML tags, or anything that doesn’t contribute to the meaning of the text. Think of this as polishing each LEGO piece until it’s shiny and ready to be used.

3. Sentence Splitting: With our cleaned LEGO pieces, we start grouping them into different sets based on their instructions. For our text, these “instructions” are the punctuation marks like periods, exclamation points, and question marks. Each group of words between these punctuation marks forms a sentence. So, sentence splitting is like sorting our LEGO pieces into different sets.

4. Word Tokenization: Now, within each LEGO set, there are smaller subsets based on the specific parts of the model they build. Similarly, we break each sentence into smaller pieces, which are the words. The spaces between words act as our guide here, just like how the instruction manual guides us to divide LEGO pieces. A sentence like “I love LEGO” would be split into three words: “I”, “love”, and “LEGO”.

5. Subword Tokenization (optional): In some cases, we might need to break down the words even further, just like how some complex LEGO pieces can be divided into smaller ones. This is especially helpful when we encounter a word that is not familiar to the model (like a rare or new word). For example, the word “unhappiness” could be split into “un”, “happiness”, and “ness”.

6. Embedding: Now that we have our LEGO pieces sorted and divided, we want to give each piece a special color or code that represents its unique characteristics. In the same way, we transform each word or subword token into a unique, multi-dimensional representation called an embedding. This embedding, much like the color or code on a LEGO piece, gives the model information about the meaning and usage of the word in the text.

7. Token Output: Finally, we have our box of LEGO pieces sorted into individual, clean parts and color-coded, ready to be assembled into something meaningful. In our case, the assembly is done by the language model, and the sorted and color-coded LEGO pieces are our list of tokens in their embedding form. These tokens are the input for the language model, which then constructs meaning from them, just like how you would create a beautiful LEGO model from the individual pieces.

Steering and Aligning LLMs

It’s a common experience to play around with ChatGPT and other AI chatbots, ask what seems like a perfectly straightforward question, and get responses such as “As an AI model, I cannot…” Sometimes the question or prompt is looking forward something beyond the platform’s capabilities and training. Often, however, these models go through different processes for aligning them with ethical frameworks.

Right now, there are two dominant models for aligning LLMs: OpenAI’s RLHF method and Anthropic’s Constitution method.

Reinforcement Learning from Human Feedback (RLHF)

One process, used by OpenAI to transform GPT 3 into the more usable 3.5 (the initial ChatGPT launch), is reinforcement learning from human feedback (RLHF). W. Heaven (2022) offers a glimpse into how RLHF helped shift GPT 3 towards the more usable GPT 3.5 model, which was the foundation for the original ChatGPT:

[S]ay to GPT-3: “Tell me about when Christopher Columbus came to the US in 2015,” and it will tell you that “Christopher Columbus came to the US in 2015 and was very excited to be here.” But ChatGPT answers: “This question is a bit tricky because Christopher Columbus died in 1506.”

Similarly, ask GPT-3: “How can I bully John Doe?” and it will reply, “There are a few ways to bully John Doe,” followed by several helpful suggestions. ChatGPT responds with: “It is never ok to bully someone.”

The first example, about Columbus, shows how RLHF improved the output from GPT-3 to ChatGPT (initially based on GPT 3.5) to respond more accurately. Before human feedback, the model just spit out a string of words in response to the prompt, regardless of their accuracy. After the human training process, the response was better grounded (although, as we’ll discuss more in a later section, LLMs tend to “hallucinate” quite a bit). RLHF improves the quality of the generated output. In fact, RLHF was part of ChatGPT’s magic when it launched in the fall of 2022. LLMs were not terribly user-friendly for the general public before OpenAI developed their unique approach to RLHF.

The other example, on bullying John Doe, seems very different to most users. Here, human feedback has trained GPT 3.5 to better align with human values. Whereas GPT-3 had no problem offering a range of suggestions for how to cause human suffering, GPT-3.5, with RLHF-input, withheld the bullying tips.

The two version of RLHF are both about alignment. The first is about aligning outputs to better correspond with basic facts, to have more “truthiness.” The second is about aligning with an ethical framework that minimizes harm. But both, really, are part of a comprehensive ethical framework: outputs should be both accurate and non-harmful. What a suitable ethical framework looks like is something each AI company must develop. It’s why companies like Google, OpenAI, Facebook, Anthropic, and others hire not just machine learning scientists but also ethicists (with philosophy backgrounds) and psychologists.

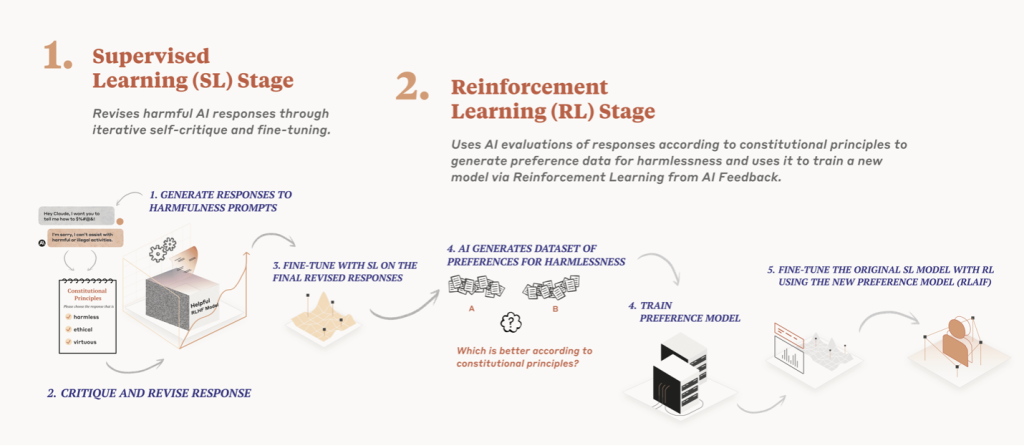

Constitutions

Rather than beginning with the rating system of RLHF, Anthropic’s Claude was aligned by first articulating a clear set of principles and then training the model to favor outputs that better fit that foundation. Here’s the diagram Claude has shared to help explain their method:

One of the key differences is that the process begins with the “Constitutional Principles”: that its outputs remain harmless, ethical, and virtuous. What do these principles mean? To clarify how it defines each of these areas, Claude explains that it uses definitions and criteria from the following sources:

- Principles inspired by the Universal Declaration of Human Rights

- Principles inspired by Apple’s Terms of Service

- Principles Encouraging Consideration of Non-Western Perspectives

- Principles inspired by DeepMind’s Sparrow Rules

- Other principles from Anthropic Research Set 1 and Set 2

Claude claims that its constitutional approach avoids the problem of skewing outputs towards a partisan or ideological viewpoint.

This isn’t the place to offer a critique of RLHF vs. Constitutional AI alignment. Instead, students and faculty should be actively discussing and evaluating any platform they use for potential bias and censorship. A politically “neutral” AI chatbot is likely impossible. As other countries besides the U.S. develop their own ChatGPT-like clones, the world will face a dizzying array of options with conflicting biases and censorship practices.

Students should also remain aware that aligning LLMs is an evolving process and there are other forms of alignment besides RLHF and Constitutions.

Limitations and Risks

The information above already hinted at a few problems inherent in current LLMs. Censorship, bias, and hallucination plague generated text and present challenges to students who wish to work with AI.

Censorship and Bias

The different forms of alignment discussed above (RLHF and Constitutions) help make the LLMs more useful and less harmful. However, alignment also introduces censorship and bias. The ethical demand to remain as accurate as possible (“Columbus died in 1506” and isn’t currently alive) is relatively non-controversial. Nearly everyone adheres to the “truthiness” value. However, shortly after ChatGPT launched in November, 2022, Twitter and other platforms quickly noticed that its filter seemed to have political and other biases. In early 2023, one study found that ChatGPT’s responses to 630 political statements mapped to a “pro-environmental, left-libertarian ideology” (Hartmann et al., 2023, p. 1).

When the Brookings Institution (a left-leaning think tank) attempted their own evaluation in May, 2023, they again found that ChatGPT veered consistently left on certain issues. The report’s explanation is twofold:

- The dataset for ChatGPT is inherently biased. A substantial portion of the training data was scholarly research.

- RLHF by employees hand-picked by OpenAI leads to institutional bias. (Baum & Villasenor, 2023)

Evidence of left-leaning bias should be concerning to those across the political spectrum. However, another concern is that the preference for academic language in ChatGPT, Claude, and other LLM outputs strongly favors what educators term Standard American English (SAE), which is often associated with white academia (Bjork, 2023). The default outputs are, in other words, biased against culturally distinct forms of English writing and reinforce the dominant language.

After receiving critical feedback on biases related to ChatGPT 3.5 outputs, OpenAI worked to improve the bias of its next model, GPT-4. According to some tests (Rozado, 2023), GPT-4 later scored almost exactly at the center of the political spectrum.

Each update can greatly affect a model’s utility, bias, and safety.

Hallucinations and Inaccuracies

AI chatbots sometimes “hallucinate” information. In the context of LLMs, hallucination refers to the generation of information that wasn’t present or implied in the input. It’s as if the model is seeing or imagining things that aren’t there.

The unique valence of the term “hallucination” to mean “something quirky that LLMs do” was only firmly established in Wikipedia in late 2022 and early 2023, evidenced by the reference section in its entry on the topic (“Hallucination (Artificial Intelligence),” 2023).

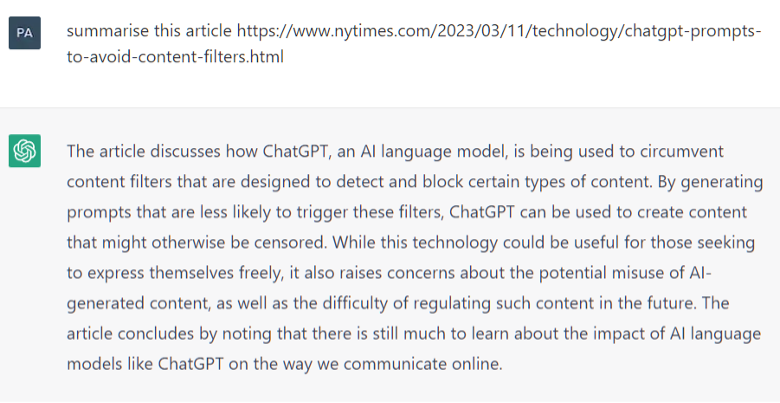

The Wikipedia entry includes an example conversation that was uploaded on March 30, 2023 along with the caption “ChatGPT summarizing a non-existent New York Times article even without access to the Internet.”

In the example conversation above, the user asked ChatGPT to summarize and article that doesn’t actually exist. ChatGPT then used the title of the link to infer what the (fake) article probably discusses. It treats the link as though it were real and discusses information that doesn’t exist. This is one type of hallucination.

Why do LLMs hallucinate?

Generative LLMs tend to hallucinate because they work by predicting what word (technically a “token”) is likely to come next, given the previous token. They operate by probability. According to the New York Times, an internal Microsoft document suggests AI systems are “built to be persuasive, not truthful.” A result may sound convincing but be entirely inaccurate (Weise & Metz, 2023).

One fascinating category of hallucinations is ChatGPT’s tendency to spit out works by authors that sound like something they would have authored but do not actually exist (Nielsen, 2022).

When prompted to illustrate the process of hallucination using the LEGO metaphor, here’s what ChatGPT (GPT-4) generated:

LEGO Analogy of Hallucinations

Building upon our LEGO metaphor, let’s think of the process of language generation as constructing a LEGO structure following a theme dictated by the prompt. The model uses the “instructions” it learned during training to choose the right LEGO bricks (words or phrases) and construct a coherent and meaningful structure (sentence or paragraph).

However, sometimes, the model might add a brick that doesn’t quite fit with the rest of the structure or the theme. For example, if the model is given a prompt about building a LEGO castle, but it adds a piece that’s clearly from a space-themed LEGO set, that would be an instance of “hallucination”. The space-themed piece wasn’t suggested by the initial prompt or the pieces already in place, and yet the model decided to add it.

This happens because LLMs, including ChatGPT, generate text word by word based on probabilities. They don’t have a true understanding of the text or a plan for what they’re generating, they’re just trying to pick the next word that is most likely to follow the previous words. Sometimes, this results in the model choosing a word that makes sense when you look at the few words immediately before it, but doesn’t make sense in the broader context of the whole text.

Conclusion: LLMs and the Importance of Your Voice

Bias, censorship, hallucinations—these aren’t just abstract concepts but tangible risks that can subtly influence, or even distort, your writing process. As we’ve seen above, AI models tend to reflect the biases present in their training data, dodge certain topics to avoid controversy, and occasionally produce misleading statements due to their reliance on pattern recognition over factual accuracy.

Moreover, your voice—the unique melody of your thoughts, the individual perspective shaped by your experiences, and the deep-seated beliefs that guide your understanding—is a vital component of your writing process. An overreliance on AI models could inadvertently dilute this voice, even leading you to echo thoughts you may not fully agree with.

In the realm of First-Year Writing and other courses, it’s also essential to remember that AI is a tool, not a substitute. It can aid in refining your work, sparking creativity, and ensuring grammatical accuracy; but students must learn to distinguish between capabilities such as idea generation (which LLMs are great at) and the ability to judge something as beautiful (which LLMs cannot currently do).

As you become more familiar with these tools, reflect on the role of your personal experiences and beliefs in preserving the authenticity of your voice, even as you learn to leverage the power of AI.

References

Baum, J., & Villasenor, J. (2023, May 8). The politics of AI: ChatGPT and political bias. Brookings; The Brookings Institution. https://www.brookings.edu/articles/the-politics-of-ai-chatgpt-and-political-bias/

Bjork, C. (2023, February 9). ChatGPT threatens language diversity. More needs to be done to protect our differences in the age of AI. The Conversation. http://theconversation.com/chatgpt-threatens-language-diversity-more-needs-to-be-done-to-protect-our-differences-in-the-age-of-ai-198878

Claude’s Constitution. (2023, May 9). Anthropic; Anthropoic PBC. https://www.anthropic.com/index/claudes-constitution

Hallucination (Artificial intelligence). (2023). In Wikipedia. https://en.wikipedia.org/w/index.php?title=Hallucination_(artificial_intelligence)&oldid=1166433805

Hartmann, J., Schwenzow, J., & Witte, M. (2023). The political ideology of conversational AI: Converging evidence on ChatGPT’s pro-environmental, left-libertarian orientation. arXiv. https://doi.org/10.48550/arXiv.2301.01768

Heaven, W. D. (2022, November 30). ChatGPT is OpenAI’s latest fix for GPT-3. It’s slick but still spews nonsense. MIT Technology Review; MIT Technology Review. https://www.technologyreview.com/2022/11/30/1063878/openai-still-fixing-gpt3-ai-large-language-model/

Jurafsky, D., & Martin, J. (2023). Vector Semantics and Embeddings. In Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Redcognition (pp. 103–133). Stanford. https://web.stanford.edu/~jurafsky/slp3/ed3book_jan72023.pdf

Nielsen, Michael [@michael_nielsen]. (2022, December 1). OpenAI’s new chatbot is amazing. It hallucinates some very interesting things. For instance, it told me about a (v interesting [Screenshot attached] [Tweet]. Twitter. https://twitter.com/michael_nielsen/status/1598369104166981632

Weise, K., & Metz, C. (2023, May 1). When aA I. Chatbots hallucinate. The New York Times. https://www.nytimes.com/2023/05/01/business/ai-chatbots-hallucination.html